kOps (Kubernetes Operations) is an open-source project that helps you create, destroy, upgrade and maintain production-grade, highly available Kubernetes clusters. You can also provision the necessary cloud infrastructure using kOps.

It is one of the more straightforward ways to get a production-grade Kubernetes cluster up and running, and it covers the cluster operations that are otherwise cumbersome to manage by hand.

kOps provides various features, and some of them are:

- It automates the provisioning of Highly Available Kubernetes clusters

- Supports zero-config managed Kubernetes add-ons

- It can deploy a cluster into an existing virtual private cloud (VPC), or create a new VPC if none is specified

- Capability to add containers, as hooks, and files to nodes via a cluster manifest

- Uses DNS to identify clusters. Automate service discovery and load balancing

- Self-healing: everything runs in Auto-Scaling Groups

- It supports multiple Operating Systems

- It supports high availability (see high_availability.md)

- Easy application upgrades and rollbacks

In this tutorial, we will cover the four basic operations:

- Cluster Creation

- Cluster Upgrade

- Cluster Rollback

- Cluster Deletion

Let’s start with creating our first kOps managed Kubernetes cluster.

How to Install kOps

Follow the steps below to install kOps. I installed it on my Mac, but you can use whichever machine suits your requirements.
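If you are not on an Intel Mac, the binary name in the download URL changes. The sketch below is one way to work out the URL for your own machine. It assumes release assets are named kops-<os>-<arch>, as in the URL used in this tutorial; the function names are hypothetical, and not every release ships a build for every platform, so check the releases page before downloading.

```shell
# Hypothetical helper: maps `uname` output to the OS/arch names used in the asset name.
detect_platform() {
  local os arch
  os="$(uname -s | tr '[:upper:]' '[:lower:]')"   # e.g. "darwin" or "linux"
  arch="$(uname -m)"
  case "$arch" in
    x86_64)        arch="amd64" ;;
    arm64|aarch64) arch="arm64" ;;
  esac
  printf '%s %s\n' "$os" "$arch"
}

# Hypothetical helper: builds a release URL in the same form as the one used in this tutorial.
# Usage: kops_download_url <release-tag> <os> <arch>
kops_download_url() {
  local tag="$1" os="$2" arch="$3"
  printf 'https://github.com/kubernetes/kops/releases/download/%s/kops-%s-%s\n' "$tag" "$os" "$arch"
}

# Print the URL for this machine and the release used in this tutorial.
platform="$(detect_platform)"
kops_download_url "1.14.0" "${platform% *}" "${platform#* }"
```

The release tag is passed in as-is because tag naming can differ between releases; copy it from the releases page rather than guessing.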

Download kOps from the releases page.

$ wget https://github.com/kubernetes/kops/releases/download/1.14.0/kops-darwin-amd64

--2021-06-14 22:30:24-- https://github.com/kubernetes/kops/releases/download/1.14.0/kops-darwin-amd64

Resolving github.com (github.com)... 81.99.162.48

Connecting to github.com (github.com)|81.99.162.48|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 123166040 (117M) [application/octet-stream]

Saving to: ‘kops-darwin-amd64’

kops-darwin-amd64

100[==============================================================================================>] 117.46M 6.18MB/s in 19s

2021-06-14 22:30:44 (6.17 MB/s) - ‘kops-darwin-amd64’ saved [123166040/123166040]

$ ls -lrt

total 264192

-rw-r--r--@ 1 ravi staff 123166040 1 Oct 2019 kops-darwin-amd64

Give executable permission to the downloaded kOps file and move it to /usr/local/bin/

$ chmod +x kops-darwin-amd64

$ ls

kops-darwin-amd64

$ mv kops-darwin-amd64 /usr/local/bin/kops

$ ls -lrt /usr/local/bin/kops

-rwxr-xr-x@ 1 ravi staff 123166040 1 Oct 2019 /usr/local/bin/kops

Run the kOps version command to verify it

$ kops version

Version 1.14.0 (git-d5078612f)

Set up AWS CLI and kubectl for kOps

The given AWS account must have the following permissions:

- AmazonEC2FullAccess

- AmazonRoute53FullAccess

- AmazonS3FullAccess

- IAMFullAccess

- AmazonVPCFullAccess
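One way to grant these permissions is to attach the AWS managed policies to a dedicated IAM group. The sketch below assumes the AWS CLI is already configured with an identity that may manage IAM; the group name kops and both function names are assumptions. Running the block only prints the policy ARNs, and nothing is changed in your account until you call attach_kops_policies yourself.

```shell
# Prints the ARN of each AWS managed policy named in the list above.
kops_policy_arns() {
  local name
  for name in AmazonEC2FullAccess AmazonRoute53FullAccess AmazonS3FullAccess IAMFullAccess AmazonVPCFullAccess; do
    printf 'arn:aws:iam::aws:policy/%s\n' "$name"
  done
}

# Creates an IAM group and attaches every policy to it.
# The default group name "kops" is an assumption; pass another name as $1.
attach_kops_policies() {
  local group="${1:-kops}" arn
  aws iam create-group --group-name "$group"
  kops_policy_arns | while read -r arn; do
    aws iam attach-group-policy --group-name "$group" --policy-arn "$arn"
  done
}

# Show what would be attached.
kops_policy_arns
```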

You need to set up the AWS CLI unless you prefer to work solely in the AWS Console. You also need kubectl, the Kubernetes command-line tool, which lets you run commands against Kubernetes clusters.

Install the AWS CLI by following the AWS manual, and refer to this article to configure it.

Then, install kubectl by following the kubectl manual.

$ curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/darwin/amd64/kubectl"

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 154 100 154 0 0 690 0 --:--:-- --:--:-- --:--:-- 687

100 53.2M 100 53.2M 0 0 5569k 0 0:00:09 0:00:09 --:--:-- 5930k

$ chmod +x kubectl

$ mv kubectl /usr/local/bin/kubectl

Create AWS S3 Bucket

First, create an S3 bucket using the AWS CLI and pass it to the kops CLI during cluster creation. kOps saves all of the cluster’s state information in this bucket. For any region other than us-east-1, specify a LocationConstraint to avoid a region error.

$ aws s3api create-bucket --bucket my-kops-test --region us-east-1

{

"Location": "/my-kops-test"

}
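The command above targets us-east-1, which does not take a location constraint. For any other region, the AWS CLI expects --create-bucket-configuration LocationConstraint=<region>. The sketch below only prints the command for a given bucket and region so you can review it before running it; the function name and the eu-west-1 example are illustrative assumptions.

```shell
# Prints the create-bucket command for a bucket and region.
# us-east-1 is the default location and takes no LocationConstraint;
# every other region needs one that matches --region.
create_bucket_cmd() {
  local bucket="$1" region="$2"
  if [ "$region" = "us-east-1" ]; then
    printf 'aws s3api create-bucket --bucket %s --region %s\n' "$bucket" "$region"
  else
    printf 'aws s3api create-bucket --bucket %s --region %s --create-bucket-configuration LocationConstraint=%s\n' \
      "$bucket" "$region" "$region"
  fi
}

create_bucket_cmd my-kops-test us-east-1
create_bucket_cmd my-kops-test eu-west-1
```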

$ aws s3 ls

2021-06-14 23:08:30 my-kops-test

It is strongly recommended to version this bucket in case you ever need to revert or recover a previous cluster version.

$ aws s3api put-bucket-versioning --bucket my-kops-test --versioning-configuration Status=Enabled

You can also define the KOPS_STATE_STORE environment variable pointing to the S3 bucket for convenience. The kops CLI then uses this environment variable.

$ export KOPS_STATE_STORE=s3://my-kops-test

$ echo $KOPS_STATE_STORE

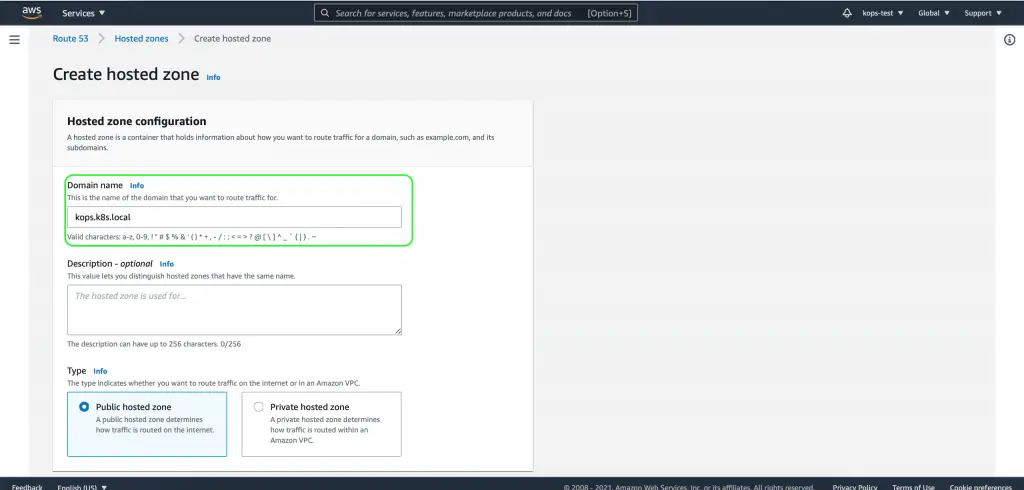

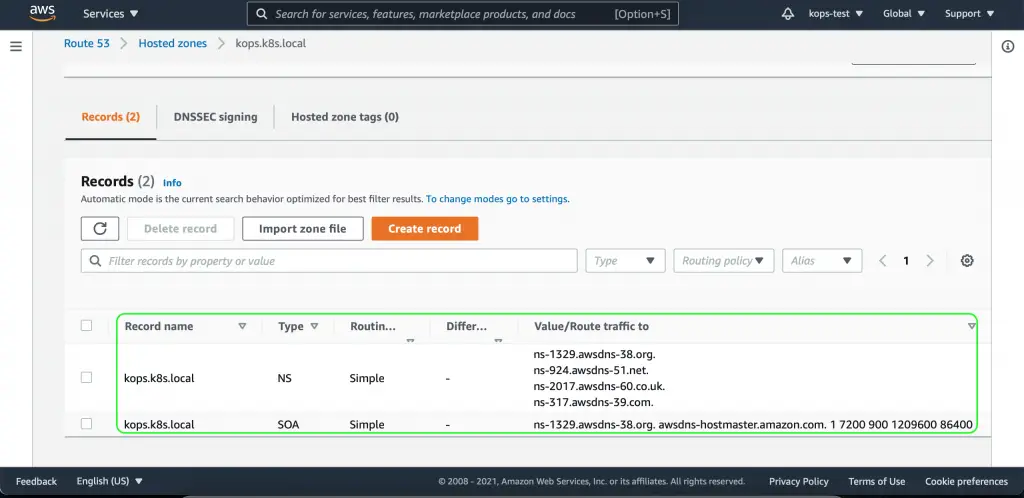

s3://my-kops-testGenerate a Route 53 hosted zone

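You can create the hosted zone with the AWS CLI by following the AWS manual, but I am using the AWS console here. Note that a cluster whose name ends in .k8s.local, like the one created later in this tutorial, uses gossip-based DNS and does not depend on a hosted zone.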

Just browse to https://console.aws.amazon.com/route53/v2/hostedzones#CreateHostedZone and create your hosted zone for the kOps cluster.

Generate an SSH key to be used by kOps for the cluster

An SSH public key must be specified when running on AWS; you register it by creating a kOps secret. You can either use your existing SSH key or generate a new key pair for the kOps cluster. Here I’m generating one on my system, which will be used by kOps.

$ ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/Users/ravi/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /Users/ravi/.ssh/id_rsa.

Your public key has been saved in /Users/ravi/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:XSWIelMnottosharefiS/guznot7I7EdI/PRv/MKYDo [email protected]

The key's randomart image is:

+---[RSA 3072]----+

| ... o .. . |

| .+oo+ o |

| . ..=+= . |

|. o. .o.*. . |

| . o+.8.o5o. |

| .o+o=. |

| E.o=-+ |

| .=.+.oo |

| oo0o o=. |

+----[SHA256]-----+

$ kops create secret --name test.kops.k8s.local sshpublickey admin -i ~/.ssh/id_rsa.pub

Create the Kubernetes cluster on AWS with kOps

The kops create cluster command takes many parameters, which are described in the kOps manual, but we will use only the bare minimum:

- --name: Name of the cluster

- --zones: Zone(s) where the cluster instances will be deployed

- --master-size: Instance size for the master(s)

- --node-size: Instance size for the nodes

- --image: Image for all instances

- --kubernetes-version: Version of Kubernetes to run
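The flags above can be kept in variables so the same values are reused across later commands. The sketch below is one way to do that and is not part of kOps itself: the variable names, the create_cluster function, and the KOPS_BIN override are all hypothetical. With KOPS_BIN=echo the function prints the arguments instead of contacting AWS, which makes it easy to check the command line first.

```shell
# Values taken from this tutorial; adjust them for your own cluster.
CLUSTER_NAME="test.kops.k8s.local"
ZONES="us-east-1a"
MASTER_SIZE="t2.micro"
NODE_SIZE="t2.micro"
IMAGE="ami-0aeeebd8d2ab47354"
K8S_VERSION="1.14.0"

# Runs `kops create cluster` with the values above.
# Extra arguments (for example --yes) are passed through unchanged.
# KOPS_BIN is a hypothetical override used only for dry runs.
create_cluster() {
  "${KOPS_BIN:-kops}" create cluster \
    --name "$CLUSTER_NAME" \
    --zones "$ZONES" \
    --master-size "$MASTER_SIZE" \
    --node-size "$NODE_SIZE" \
    --image "$IMAGE" \
    --kubernetes-version "$K8S_VERSION" \
    "$@"
}

# Dry run: print the arguments kops would receive.
KOPS_BIN=echo create_cluster
```

Calling create_cluster without the override runs the real command with the same arguments.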

$ kops create cluster --name test.kops.k8s.local --zones us-east-1a --master-size t2.micro --node-size t2.micro --image ami-0aeeebd8d2ab47354 --kubernetes-version 1.14.0

I0614 23:16:50.377326 10157 create_cluster.go:519] Inferred --cloud=aws from zone "us-east-1a"

I0614 23:16:51.013900 10157 subnets.go:184] Assigned CIDR 172.20.32.0/19 to subnet us-east-1a

Previewing changes that will be made:

*********************************************************************************

A new kubernetes version is available: 1.14.10

Upgrading is recommended (try kops upgrade cluster)

More information: https://github.com/kubernetes/kops/blob/master/permalinks/upgrade_k8s.md#1.14.10

*********************************************************************************

I0614 23:18:45.001501 10193 apply_cluster.go:559] Gossip DNS: skipping DNS validation

I0614 23:18:45.048364 10193 executor.go:103] Tasks: 0 done / 90 total; 42 can run

I0614 23:18:45.967807 10193 executor.go:103] Tasks: 42 done / 90 total; 24 can run

I0614 23:18:46.752548 10193 executor.go:103] Tasks: 66 done / 90 total; 20 can run

I0614 23:18:47.426425 10193 executor.go:103] Tasks: 86 done / 90 total; 3 can run

W0614 23:18:47.531285 10193 keypair.go:140] Task did not have an address: *awstasks.LoadBalancer {"Name":"api.test.kops.k8s.local","Lifecycle":"Sync","LoadBalancerName":"api-test-kops-k8s-local-11nu02","DNSName":null,"HostedZoneId":null,"Subnets":[{"Name":"us-east-1a.test.kops.k8s.local","ShortName":"us-east-1a","Lifecycle":"Sync","ID":null,"VPC":{"Name":"test.kops.k8s.local","Lifecycle":"Sync","ID":null,"CIDR":"172.20.0.0/16","EnableDNSHostnames":true,"EnableDNSSupport":true,"Shared":false,"Tags":{"KubernetesCluster":"test.kops.k8s.local","Name":"test.kops.k8s.local","kubernetes.io/cluster/test.kops.k8s.local":"owned"}},"AvailabilityZone":"us-east-1a","CIDR":"172.20.32.0/19","Shared":false,"Tags":{"KubernetesCluster":"test.kops.k8s.local","Name":"us-east-1a.test.kops.k8s.local","SubnetType":"Public","kubernetes.io/cluster/test.kops.k8s.local":"owned","kubernetes.io/role/elb":"1"}}],"SecurityGroups":[{"Name":"api-elb.test.kops.k8s.local","Lifecycle":"Sync","ID":null,"Description":"Security group for api ELB","VPC":{"Name":"test.kops.k8s.local","Lifecycle":"Sync","ID":null,"CIDR":"172.20.0.0/16","EnableDNSHostnames":true,"EnableDNSSupport":true,"Shared":false,"Tags":{"KubernetesCluster":"test.kops.k8s.local","Name":"test.kops.k8s.local","kubernetes.io/cluster/test.kops.k8s.local":"owned"}},"RemoveExtraRules":["port=443"],"Shared":null,"Tags":{"KubernetesCluster":"test.kops.k8s.local","Name":"api-elb.test.kops.k8s.local","kubernetes.io/cluster/test.kops.k8s.local":"owned"}}],"Listeners":{"443":{"InstancePort":443,"SSLCertificateID":""}},"Scheme":null,"HealthCheck":{"Target":"SSL:443","HealthyThreshold":2,"UnhealthyThreshold":2,"Interval":10,"Timeout":5},"AccessLog":null,"ConnectionDraining":null,"ConnectionSettings":{"IdleTimeout":300},"CrossZoneLoadBalancing":{"Enabled":false},"SSLCertificateID":"","Tags":{"KubernetesCluster":"test.kops.k8s.local","Name":"api.test.kops.k8s.local","kubernetes.io/cluster/test.kops.k8s.local":"owned"}}

I0614 23:18:48.011439 10193 executor.go:103] Tasks: 89 done / 90 total; 1 can run

I0614 23:18:48.197184 10193 executor.go:103] Tasks: 90 done / 90 total; 0 can run

Will create resources:

AutoscalingGroup/master-us-east-1a.masters.test.kops.k8s.local

Granularity 1Minute

LaunchConfiguration name:master-us-east-1a.masters.test.kops.k8s.local

MaxSize 1

Metrics [GroupDesiredCapacity, GroupInServiceInstances, GroupMaxSize, GroupMinSize, GroupPendingInstances, GroupStandbyInstances, GroupTerminatingInstances, GroupTotalInstances]

MinSize 1

Subnets [name:us-east-1a.test.kops.k8s.local]

SuspendProcesses []

Tags {Name: master-us-east-1a.masters.test.kops.k8s.local, KubernetesCluster: test.kops.k8s.local, k8s.io/cluster-autoscaler/node-template/label/kops.k8s.io/instancegroup: master-us-east-1a, k8s.io/role/master: 1}

AutoscalingGroup/nodes.test.kops.k8s.local

Granularity 1Minute

LaunchConfiguration name:nodes.test.kops.k8s.local

MaxSize 2

Metrics [GroupDesiredCapacity, GroupInServiceInstances, GroupMaxSize, GroupMinSize, GroupPendingInstances, GroupStandbyInstances, GroupTerminatingInstances, GroupTotalInstances]

MinSize 2

Subnets [name:us-east-1a.test.kops.k8s.local]

SuspendProcesses []

Tags {k8s.io/cluster-autoscaler/node-template/label/kops.k8s.io/instancegroup: nodes, k8s.io/role/node: 1, Name: nodes.test.kops.k8s.local, KubernetesCluster: test.kops.k8s.local}

DHCPOptions/test.kops.k8s.local

DomainName ec2.internal

DomainNameServers AmazonProvidedDNS

Shared false

Tags {kubernetes.io/cluster/test.kops.k8s.local: owned, Name: test.kops.k8s.local, KubernetesCluster: test.kops.k8s.local}

EBSVolume/a.etcd-events.test.kops.k8s.local

AvailabilityZone us-east-1a

Encrypted false

SizeGB 20

Tags {KubernetesCluster: test.kops.k8s.local, k8s.io/etcd/events: a/a, k8s.io/role/master: 1, kubernetes.io/cluster/test.kops.k8s.local: owned, Name: a.etcd-events.test.kops.k8s.local}

VolumeType gp2

EBSVolume/a.etcd-main.test.kops.k8s.local

AvailabilityZone us-east-1a

Encrypted false

SizeGB 20

Tags {k8s.io/etcd/main: a/a, k8s.io/role/master: 1, kubernetes.io/cluster/test.kops.k8s.local: owned, Name: a.etcd-main.test.kops.k8s.local, KubernetesCluster: test.kops.k8s.local}

VolumeType gp2

IAMInstanceProfile/masters.test.kops.k8s.local

Shared false

IAMInstanceProfile/nodes.test.kops.k8s.local

Shared false

IAMInstanceProfileRole/masters.test.kops.k8s.local

InstanceProfile name:masters.test.kops.k8s.local id:masters.test.kops.k8s.local

Role name:masters.test.kops.k8s.local

IAMInstanceProfileRole/nodes.test.kops.k8s.local

InstanceProfile name:nodes.test.kops.k8s.local id:nodes.test.kops.k8s.local

Role name:nodes.test.kops.k8s.local

IAMRole/masters.test.kops.k8s.local

ExportWithID masters

IAMRole/nodes.test.kops.k8s.local

ExportWithID nodes

IAMRolePolicy/masters.test.kops.k8s.local

Role name:masters.test.kops.k8s.local

IAMRolePolicy/nodes.test.kops.k8s.local

Role name:nodes.test.kops.k8s.local

InternetGateway/test.kops.k8s.local

VPC name:test.kops.k8s.local

Shared false

Tags {Name: test.kops.k8s.local, KubernetesCluster: test.kops.k8s.local, kubernetes.io/cluster/test.kops.k8s.local: owned}

Keypair/apiserver-aggregator

Signer name:apiserver-aggregator-ca id:cn=apiserver-aggregator-ca

Subject cn=aggregator

Type client

Format v1alpha2

Keypair/apiserver-aggregator-ca

Subject cn=apiserver-aggregator-ca

Type ca

Format v1alpha2

Keypair/apiserver-proxy-client

Signer name:ca id:cn=kubernetes

Subject cn=apiserver-proxy-client

Type client

Format v1alpha2

Keypair/ca

Subject cn=kubernetes

Type ca

Format v1alpha2

Keypair/etcd-clients-ca

Subject cn=etcd-clients-ca

Type ca

Format v1alpha2

Keypair/etcd-manager-ca-events

Subject cn=etcd-manager-ca-events

Type ca

Format v1alpha2

Keypair/etcd-manager-ca-main

Subject cn=etcd-manager-ca-main

Type ca

Format v1alpha2

Keypair/etcd-peers-ca-events

Subject cn=etcd-peers-ca-events

Type ca

Format v1alpha2

Keypair/etcd-peers-ca-main

Subject cn=etcd-peers-ca-main

Type ca

Format v1alpha2

Keypair/kops

Signer name:ca id:cn=kubernetes

Subject o=system:masters,cn=kops

Type client

Format v1alpha2

Keypair/kube-controller-manager

Signer name:ca id:cn=kubernetes

Subject cn=system:kube-controller-manager

Type client

Format v1alpha2

Keypair/kube-proxy

Signer name:ca id:cn=kubernetes

Subject cn=system:kube-proxy

Type client

Format v1alpha2

Keypair/kube-scheduler

Signer name:ca id:cn=kubernetes

Subject cn=system:kube-scheduler

Type client

Format v1alpha2

Keypair/kubecfg

Signer name:ca id:cn=kubernetes

Subject o=system:masters,cn=kubecfg

Type client

Format v1alpha2

Keypair/kubelet

Signer name:ca id:cn=kubernetes

Subject o=system:nodes,cn=kubelet

Type client

Format v1alpha2

Keypair/kubelet-api

Signer name:ca id:cn=kubernetes

Subject cn=kubelet-api

Type client

Format v1alpha2

Keypair/master

AlternateNames [100.64.0.1, 127.0.0.1, api.internal.test.kops.k8s.local, api.test.kops.k8s.local, kubernetes, kubernetes.default, kubernetes.default.svc, kubernetes.default.svc.cluster.local]

Signer name:ca id:cn=kubernetes

Subject cn=kubernetes-master

Type server

Format v1alpha2

LaunchConfiguration/master-us-east-1a.masters.test.kops.k8s.local

AssociatePublicIP true

IAMInstanceProfile name:masters.test.kops.k8s.local id:masters.test.kops.k8s.local

ImageID ami-0aeeebd8d2ab47354

InstanceType t2.micro

RootVolumeSize 64

RootVolumeType gp2

SSHKey name:kubernetes.test.kops.k8s.local-83:96:15:36:d5:27:79:ea:42:14:0c:65:58:56:a9:c9 id:kubernetes.test.kops.k8s.local-83:96:15:36:d5:27:79:ea:42:14:0c:65:58:56:a9:c9

SecurityGroups [name:masters.test.kops.k8s.local]

SpotPrice

LaunchConfiguration/nodes.test.kops.k8s.local

AssociatePublicIP true

IAMInstanceProfile name:nodes.test.kops.k8s.local id:nodes.test.kops.k8s.local

ImageID ami-0aeeebd8d2ab47354

InstanceType t2.micro

RootVolumeSize 128

RootVolumeType gp2

SSHKey name:kubernetes.test.kops.k8s.local-83:96:15:36:d5:27:79:ea:42:14:0c:65:58:56:a9:c9 id:kubernetes.test.kops.k8s.local-83:96:15:36:d5:27:79:ea:42:14:0c:65:58:56:a9:c9

SecurityGroups [name:nodes.test.kops.k8s.local]

SpotPrice

LoadBalancer/api.test.kops.k8s.local

LoadBalancerName api-test-kops-k8s-local-11nu02

Subnets [name:us-east-1a.test.kops.k8s.local]

SecurityGroups [name:api-elb.test.kops.k8s.local]

Listeners {443: {"InstancePort":443,"SSLCertificateID":""}}

HealthCheck {"Target":"SSL:443","HealthyThreshold":2,"UnhealthyThreshold":2,"Interval":10,"Timeout":5}

ConnectionSettings {"IdleTimeout":300}

CrossZoneLoadBalancing {"Enabled":false}

SSLCertificateID

Tags {Name: api.test.kops.k8s.local, KubernetesCluster: test.kops.k8s.local, kubernetes.io/cluster/test.kops.k8s.local: owned}

LoadBalancerAttachment/api-master-us-east-1a

LoadBalancer name:api.test.kops.k8s.local id:api.test.kops.k8s.local

AutoscalingGroup name:master-us-east-1a.masters.test.kops.k8s.local id:master-us-east-1a.masters.test.kops.k8s.local

ManagedFile/etcd-cluster-spec-events

Location backups/etcd/events/control/etcd-cluster-spec

ManagedFile/etcd-cluster-spec-main

Location backups/etcd/main/control/etcd-cluster-spec

ManagedFile/manifests-etcdmanager-events

Location manifests/etcd/events.yaml

ManagedFile/manifests-etcdmanager-main

Location manifests/etcd/main.yaml

ManagedFile/test.kops.k8s.local-addons-bootstrap

Location addons/bootstrap-channel.yaml

ManagedFile/test.kops.k8s.local-addons-core.addons.k8s.io

Location addons/core.addons.k8s.io/v1.4.0.yaml

ManagedFile/test.kops.k8s.local-addons-dns-controller.addons.k8s.io-k8s-1.12

Location addons/dns-controller.addons.k8s.io/k8s-1.12.yaml

ManagedFile/test.kops.k8s.local-addons-dns-controller.addons.k8s.io-k8s-1.6

Location addons/dns-controller.addons.k8s.io/k8s-1.6.yaml

ManagedFile/test.kops.k8s.local-addons-dns-controller.addons.k8s.io-pre-k8s-1.6

Location addons/dns-controller.addons.k8s.io/pre-k8s-1.6.yaml

ManagedFile/test.kops.k8s.local-addons-kube-dns.addons.k8s.io-k8s-1.12

Location addons/kube-dns.addons.k8s.io/k8s-1.12.yaml

ManagedFile/test.kops.k8s.local-addons-kube-dns.addons.k8s.io-k8s-1.6

Location addons/kube-dns.addons.k8s.io/k8s-1.6.yaml

ManagedFile/test.kops.k8s.local-addons-kube-dns.addons.k8s.io-pre-k8s-1.6

Location addons/kube-dns.addons.k8s.io/pre-k8s-1.6.yaml

ManagedFile/test.kops.k8s.local-addons-kubelet-api.rbac.addons.k8s.io-k8s-1.9

Location addons/kubelet-api.rbac.addons.k8s.io/k8s-1.9.yaml

ManagedFile/test.kops.k8s.local-addons-limit-range.addons.k8s.io

Location addons/limit-range.addons.k8s.io/v1.5.0.yaml

ManagedFile/test.kops.k8s.local-addons-rbac.addons.k8s.io-k8s-1.8

Location addons/rbac.addons.k8s.io/k8s-1.8.yaml

ManagedFile/test.kops.k8s.local-addons-storage-aws.addons.k8s.io-v1.6.0

Location addons/storage-aws.addons.k8s.io/v1.6.0.yaml

ManagedFile/test.kops.k8s.local-addons-storage-aws.addons.k8s.io-v1.7.0

Location addons/storage-aws.addons.k8s.io/v1.7.0.yaml

Route/0.0.0.0/0

RouteTable name:test.kops.k8s.local

CIDR 0.0.0.0/0

InternetGateway name:test.kops.k8s.local

RouteTable/test.kops.k8s.local

VPC name:test.kops.k8s.local

Shared false

Tags {Name: test.kops.k8s.local, KubernetesCluster: test.kops.k8s.local, kubernetes.io/cluster/test.kops.k8s.local: owned, kubernetes.io/kops/role: public}

RouteTableAssociation/us-east-1a.test.kops.k8s.local

RouteTable name:test.kops.k8s.local

Subnet name:us-east-1a.test.kops.k8s.local

SSHKey/kubernetes.test.kops.k8s.local-83:96:15:36:d5:27:79:ea:42:14:0c:65:58:56:a9:c9

KeyFingerprint 27:f9:ae:b3:f8:4f:22:71:57:8a:2b:09:e2:dd:2d:45

Secret/admin

Secret/kube

Secret/kube-proxy

Secret/kubelet

Secret/system:controller_manager

Secret/system:dns

Secret/system:logging

Secret/system:monitoring

Secret/system:scheduler

SecurityGroup/api-elb.test.kops.k8s.local

Description Security group for api ELB

VPC name:test.kops.k8s.local

RemoveExtraRules [port=443]

Tags {Name: api-elb.test.kops.k8s.local, KubernetesCluster: test.kops.k8s.local, kubernetes.io/cluster/test.kops.k8s.local: owned}

SecurityGroup/masters.test.kops.k8s.local

Description Security group for masters

VPC name:test.kops.k8s.local

RemoveExtraRules [port=22, port=443, port=2380, port=2381, port=4001, port=4002, port=4789, port=179]

Tags {Name: masters.test.kops.k8s.local, KubernetesCluster: test.kops.k8s.local, kubernetes.io/cluster/test.kops.k8s.local: owned}

SecurityGroup/nodes.test.kops.k8s.local

Description Security group for nodes

VPC name:test.kops.k8s.local

RemoveExtraRules [port=22]

Tags {kubernetes.io/cluster/test.kops.k8s.local: owned, Name: nodes.test.kops.k8s.local, KubernetesCluster: test.kops.k8s.local}

SecurityGroupRule/all-master-to-master

SecurityGroup name:masters.test.kops.k8s.local

SourceGroup name:masters.test.kops.k8s.local

SecurityGroupRule/all-master-to-node

SecurityGroup name:nodes.test.kops.k8s.local

SourceGroup name:masters.test.kops.k8s.local

SecurityGroupRule/all-node-to-node

SecurityGroup name:nodes.test.kops.k8s.local

SourceGroup name:nodes.test.kops.k8s.local

SecurityGroupRule/api-elb-egress

SecurityGroup name:api-elb.test.kops.k8s.local

CIDR 0.0.0.0/0

Egress true

SecurityGroupRule/https-api-elb-0.0.0.0/0

SecurityGroup name:api-elb.test.kops.k8s.local

CIDR 0.0.0.0/0

Protocol tcp

FromPort 443

ToPort 443

SecurityGroupRule/https-elb-to-master

SecurityGroup name:masters.test.kops.k8s.local

Protocol tcp

FromPort 443

ToPort 443

SourceGroup name:api-elb.test.kops.k8s.local

SecurityGroupRule/icmp-pmtu-api-elb-0.0.0.0/0

SecurityGroup name:api-elb.test.kops.k8s.local

CIDR 0.0.0.0/0

Protocol icmp

FromPort 3

ToPort 4

SecurityGroupRule/master-egress

SecurityGroup name:masters.test.kops.k8s.local

CIDR 0.0.0.0/0

Egress true

SecurityGroupRule/node-egress

SecurityGroup name:nodes.test.kops.k8s.local

CIDR 0.0.0.0/0

Egress true

SecurityGroupRule/node-to-master-tcp-1-2379

SecurityGroup name:masters.test.kops.k8s.local

Protocol tcp

FromPort 1

ToPort 2379

SourceGroup name:nodes.test.kops.k8s.local

SecurityGroupRule/node-to-master-tcp-2382-4000

SecurityGroup name:masters.test.kops.k8s.local

Protocol tcp

FromPort 2382

ToPort 4000

SourceGroup name:nodes.test.kops.k8s.local

SecurityGroupRule/node-to-master-tcp-4003-65535

SecurityGroup name:masters.test.kops.k8s.local

Protocol tcp

FromPort 4003

ToPort 65535

SourceGroup name:nodes.test.kops.k8s.local

SecurityGroupRule/node-to-master-udp-1-65535

SecurityGroup name:masters.test.kops.k8s.local

Protocol udp

FromPort 1

ToPort 65535

SourceGroup name:nodes.test.kops.k8s.local

SecurityGroupRule/ssh-external-to-master-0.0.0.0/0

SecurityGroup name:masters.test.kops.k8s.local

CIDR 0.0.0.0/0

Protocol tcp

FromPort 22

ToPort 22

SecurityGroupRule/ssh-external-to-node-0.0.0.0/0

SecurityGroup name:nodes.test.kops.k8s.local

CIDR 0.0.0.0/0

Protocol tcp

FromPort 22

ToPort 22

Subnet/us-east-1a.test.kops.k8s.local

ShortName us-east-1a

VPC name:test.kops.k8s.local

AvailabilityZone us-east-1a

CIDR 172.20.32.0/19

Shared false

Tags {SubnetType: Public, kubernetes.io/role/elb: 1, Name: us-east-1a.test.kops.k8s.local, KubernetesCluster: test.kops.k8s.local, kubernetes.io/cluster/test.kops.k8s.local: owned}

VPC/test.kops.k8s.local

CIDR 172.20.0.0/16

EnableDNSHostnames true

EnableDNSSupport true

Shared false

Tags {Name: test.kops.k8s.local, KubernetesCluster: test.kops.k8s.local, kubernetes.io/cluster/test.kops.k8s.local: owned}

VPCDHCPOptionsAssociation/test.kops.k8s.local

VPC name:test.kops.k8s.local

DHCPOptions name:test.kops.k8s.local

Must specify --yes to apply changes

Run the actual create

Review the output and, if all the details are valid and correct, apply the changes by running kops update cluster with --yes.

$ kops update cluster test.kops.k8s.local --yes

*********************************************************************************

A new kubernetes version is available: 1.14.10

Upgrading is recommended (try kops upgrade cluster)

More information: https://github.com/kubernetes/kops/blob/master/permalinks/upgrade_k8s.md#1.14.10

*********************************************************************************

I0614 23:20:04.962132 10205 apply_cluster.go:559] Gossip DNS: skipping DNS validation

I0614 23:20:06.433674 10205 executor.go:103] Tasks: 0 done / 90 total; 42 can run

I0614 23:20:08.101099 10205 vfs_castore.go:729] Issuing new certificate: "ca"

I0614 23:20:08.106221 10205 vfs_castore.go:729] Issuing new certificate: "etcd-clients-ca"

I0614 23:20:08.213700 10205 vfs_castore.go:729] Issuing new certificate: "etcd-peers-ca-main"

I0614 23:20:08.244157 10205 vfs_castore.go:729] Issuing new certificate: "etcd-manager-ca-main"

I0614 23:20:08.259931 10205 vfs_castore.go:729] Issuing new certificate: "etcd-peers-ca-events"

I0614 23:20:08.500961 10205 vfs_castore.go:729] Issuing new certificate: "etcd-manager-ca-events"

I0614 23:20:08.533954 10205 vfs_castore.go:729] Issuing new certificate: "apiserver-aggregator-ca"

I0614 23:20:09.840000 10205 executor.go:103] Tasks: 42 done / 90 total; 24 can run

I0614 23:20:11.083819 10205 vfs_castore.go:729] Issuing new certificate: "kubelet"

I0614 23:20:11.125938 10205 vfs_castore.go:729] Issuing new certificate: "apiserver-proxy-client"

I0614 23:20:11.133017 10205 vfs_castore.go:729] Issuing new certificate: "kube-proxy"

I0614 23:20:11.320722 10205 vfs_castore.go:729] Issuing new certificate: "apiserver-aggregator"

I0614 23:20:11.397990 10205 vfs_castore.go:729] Issuing new certificate: "kube-scheduler"

I0614 23:20:11.487591 10205 vfs_castore.go:729] Issuing new certificate: "kube-controller-manager"

I0614 23:20:11.763515 10205 vfs_castore.go:729] Issuing new certificate: "kubecfg"

I0614 23:20:11.779445 10205 vfs_castore.go:729] Issuing new certificate: "kubelet-api"

I0614 23:20:12.102637 10205 vfs_castore.go:729] Issuing new certificate: "kops"

I0614 23:20:13.021088 10205 executor.go:103] Tasks: 66 done / 90 total; 20 can run

I0614 23:20:14.015579 10205 launchconfiguration.go:364] waiting for IAM instance profile "nodes.test.kops.k8s.local" to be ready

I0614 23:20:25.689729 10205 executor.go:103] Tasks: 86 done / 90 total; 3 can run

I0614 23:20:27.477556 10205 vfs_castore.go:729] Issuing new certificate: "master"

I0614 23:20:28.549187 10205 executor.go:103] Tasks: 89 done / 90 total; 1 can run

W0614 23:20:28.983938 10205 executor.go:130] error running task "LoadBalancerAttachment/api-master-us-east-1a" (9m59s remaining to succeed): error attaching autoscaling group to ELB: ValidationError: Provided Load Balancers may not be valid. Please ensure they exist and try again.

status code: 400, request id: 589145f3-8f1f-4741-8d90-e447c5d7062f

I0614 23:20:28.984004 10205 executor.go:145] No progress made, sleeping before retrying 1 failed task(s)

I0614 23:20:38.989047 10205 executor.go:103] Tasks: 89 done / 90 total; 1 can run

I0614 23:20:39.582043 10205 executor.go:103] Tasks: 90 done / 90 total; 0 can run

I0614 23:20:40.071257 10205 update_cluster.go:294] Exporting kubecfg for cluster

kops has set your kubectl context to test.kops.k8s.local

Cluster changes have been applied to the cloud.

$ kops get cluster

NAME CLOUD ZONES

test.kops.k8s.local aws us-east-1a

Verify the kOps cluster

Now, verify that the kOps cluster has been created.

$ kops get cluster

NAME CLOUD ZONES

test.kops.k8s.local aws us-east-1a

Validate the kOps cluster

You can validate the newly created kops cluster. The validate command validates the following components:

- All control plane nodes are running and have “Ready” status.

- All worker nodes are running and have “Ready” status.

- All control plane nodes have the expected pods.

- All pods with a critical priority are running and have “Ready” status.
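A freshly created cluster usually needs several minutes before all of these checks pass, so the first validation attempts may fail. A minimal sketch of a retry wrapper follows; the retry function is a hypothetical helper, not a kOps feature, and the attempt count and delay in the commented example are arbitrary.

```shell
# Runs a command until it succeeds or the attempt limit is reached.
# Usage: retry <max-attempts> <delay-seconds> <command> [args...]
retry() {
  local max="$1" delay="$2" attempt=1
  shift 2
  until "$@"; do
    if [ "$attempt" -ge "$max" ]; then
      echo "giving up after $attempt attempts: $*" >&2
      return 1
    fi
    attempt=$((attempt + 1))
    sleep "$delay"
  done
}

# Example (not run here): poll validation every 30 seconds, up to 20 times.
# retry 20 30 kops validate cluster
```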

$ kops validate cluster

Using cluster from kubectl context: test.kops.k8s.local

Validating cluster test.kops.k8s.local

INSTANCE GROUPS

NAME ROLE MACHINETYPE MIN MAX SUBNETS

master-us-east-1a Master t2.micro 1 1 us-east-1a

nodes Node t2.micro 2 2 us-east-1a

NODE STATUS

NAME ROLE READY

ip-172-20-46-172.ec2.internal master True

ip-172-20-51-54.ec2.internal node True

ip-172-20-53-53.ec2.internal node True

Your cluster test.kops.k8s.local is ready

Verify kOps Instance Group

The InstanceGroup resource represents a group of similar machines typically provisioned in the same availability zone. On AWS, instance groups map directly to an autoscaling group.

You can find the complete list of keys on the InstanceGroup reference page.

$ kops get ig

Using cluster from kubectl context: test.kops.k8s.local

NAME ROLE MACHINETYPE MIN MAX ZONES

master-us-east-1a Master t2.micro 1 1 us-east-1a

nodes Node t2.micro 2 2 us-east-1a

Verify Kubernetes Nodes

Kubernetes runs your workload by placing containers into Pods to run on Nodes. Depending on the cluster, a node may be a virtual or physical machine.
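When checking nodes from a script rather than by eye, the STATUS column can be counted directly. The sketch below assumes the default column layout of kubectl get nodes --no-headers (NAME, STATUS, ROLES, AGE, VERSION); the function name is hypothetical.

```shell
# Reads `kubectl get nodes --no-headers` output on stdin and prints how many
# nodes have a STATUS (second column) of exactly "Ready".
count_ready_nodes() {
  awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Example (not run here):
# kubectl get nodes --no-headers | count_ready_nodes
```

For the cluster in this tutorial, one master plus two nodes, the expected count once everything is up is 3.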

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

ip-172-20-46-172.ec2.internal Ready master 32m v1.14.0

ip-172-20-51-54.ec2.internal Ready node 31m v1.14.0

ip-172-20-53-53.ec2.internal Ready node 31m v1.14.0

Verify Kubernetes Namespaces

Kubernetes supports multiple virtual clusters backed by the same physical cluster. These virtual clusters are called namespaces.

$ kubectl get ns

NAME STATUS AGE

default Active 33m

kube-node-lease Active 33m

kube-public Active 33m

kube-system Active 33m

Verify all the Kubernetes Pods

Pods are the smallest deployable units of computing that you can create and manage in Kubernetes.

A Pod is a group of one or more containers with shared storage and network resources and a specification for how to run the containers.
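Because a Pod can hold several containers, the READY column of kubectl get pods shows ready/total containers (for example 3/3). The sketch below filters for pods that are not fully ready. It assumes the column layout of kubectl get pods --all-namespaces --no-headers (NAMESPACE, NAME, READY, STATUS, ...); the function name is hypothetical.

```shell
# Reads `kubectl get pods --all-namespaces --no-headers` output on stdin and
# prints namespace/name for each pod whose READY column shows fewer ready
# containers than the total, or whose STATUS is not "Running".
# Note: pods of finished jobs (STATUS "Completed") are reported as well.
pods_not_ready() {
  awk '{ split($3, r, "/"); if (r[1] != r[2] || $4 != "Running") print $1 "/" $2 }'
}

# Example (not run here):
# kubectl get pods --all-namespaces --no-headers | pods_not_ready
```

Empty output means every listed pod is running with all of its containers ready.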

$ kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system dns-controller-7f9457558d-kh9bp 1/1 Running 0 9m47s

kube-system etcd-manager-events-ip-172-20-46-172.ec2.internal 1/1 Running 0 8m27s

kube-system etcd-manager-main-ip-172-20-46-172.ec2.internal 1/1 Running 0 8m57s

kube-system kube-apiserver-ip-172-20-46-172.ec2.internal 1/1 Running 3 9m36s

kube-system kube-controller-manager-ip-172-20-46-172.ec2.internal 1/1 Running 0 8m35s

kube-system kube-dns-66d58c65d5-59q6n 3/3 Running 0 9m13s

kube-system kube-dns-66d58c65d5-87f62 3/3 Running 0 10m

kube-system kube-dns-autoscaler-6567f59ccb-4vqst 1/1 Running 0 10m

kube-system kube-proxy-ip-172-20-46-172.ec2.internal 1/1 Running 0 8m32s

kube-system kube-proxy-ip-172-20-51-54.ec2.internal 1/1 Running 0 9m6s

kube-system kube-proxy-ip-172-20-53-53.ec2.internal 1/1 Running 0 9m13s

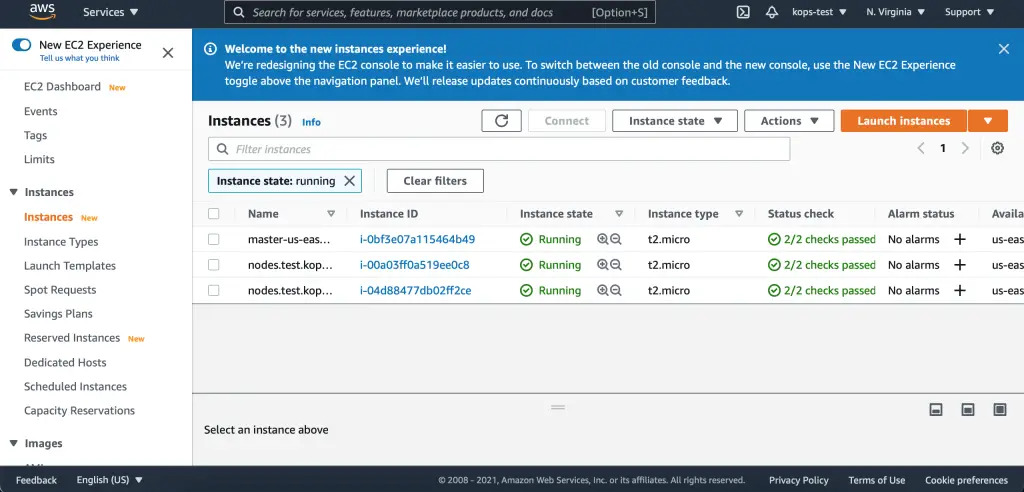

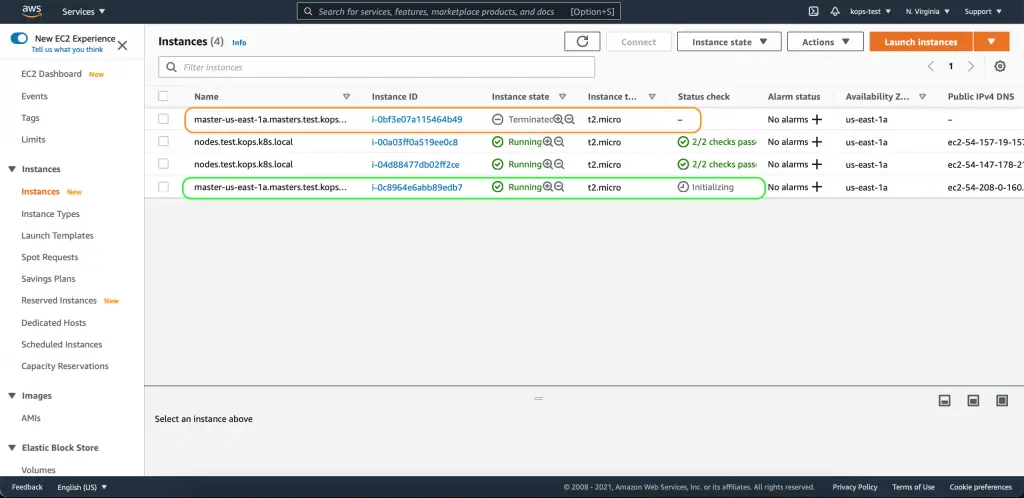

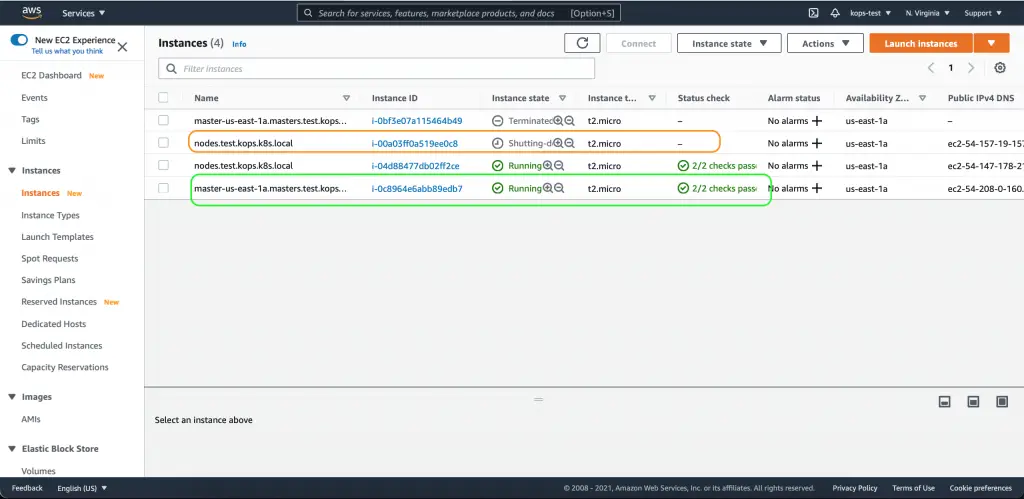

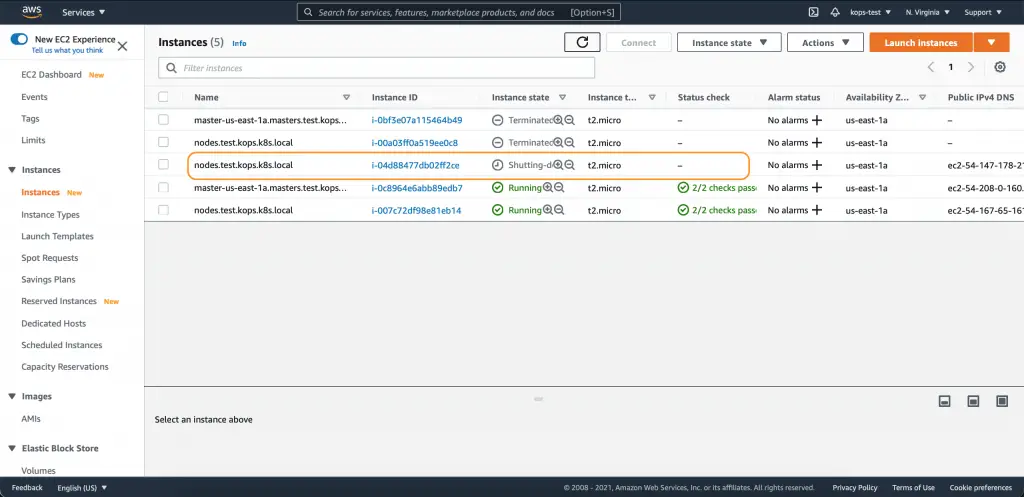

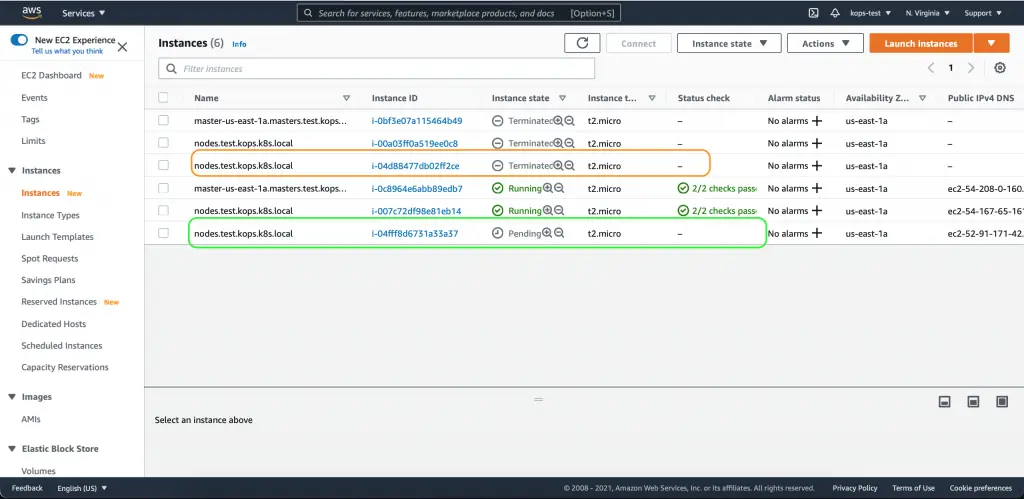

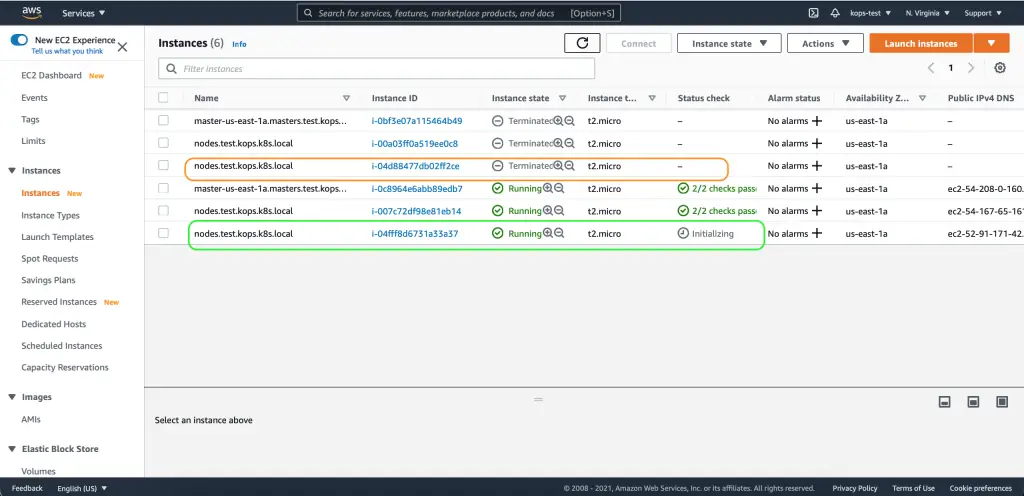

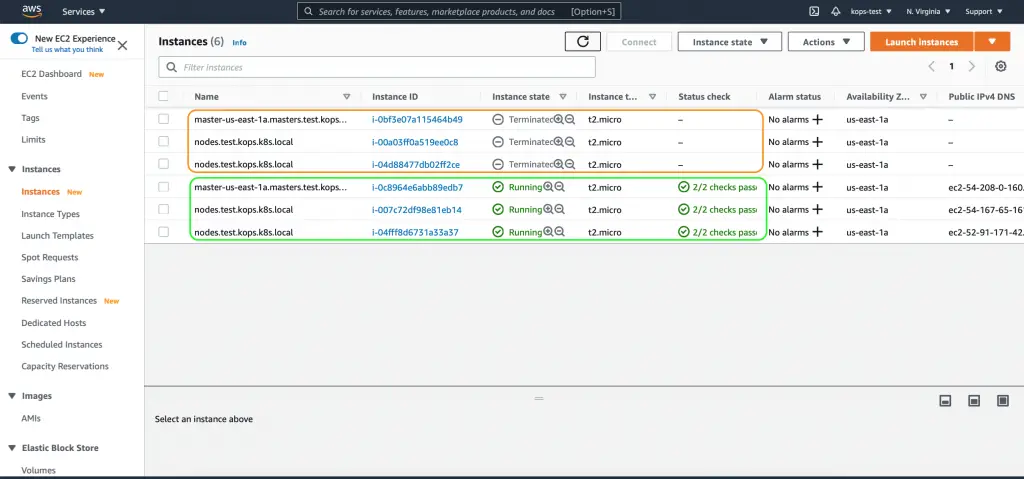

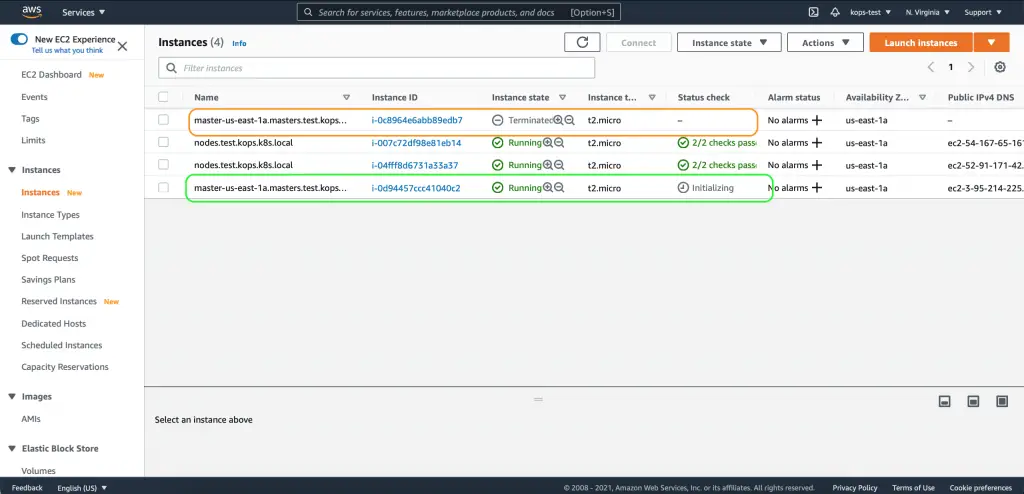

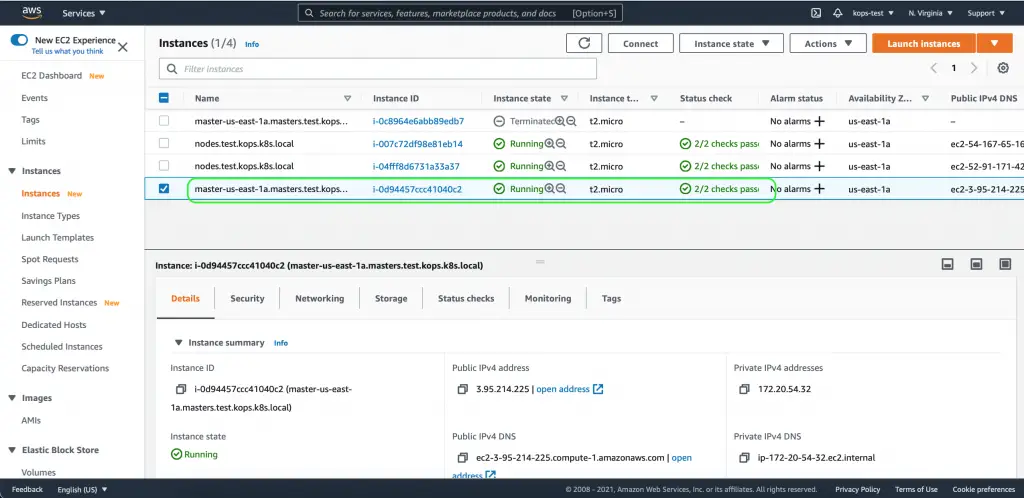

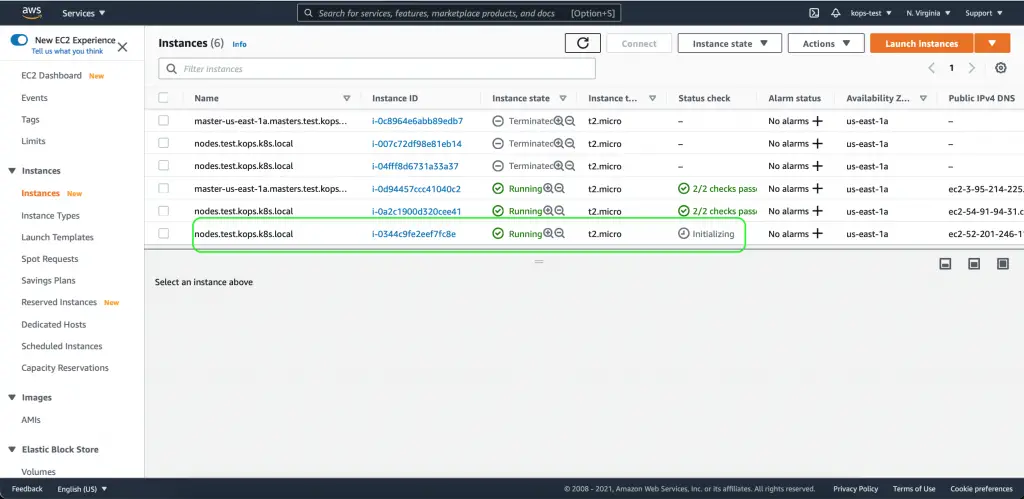

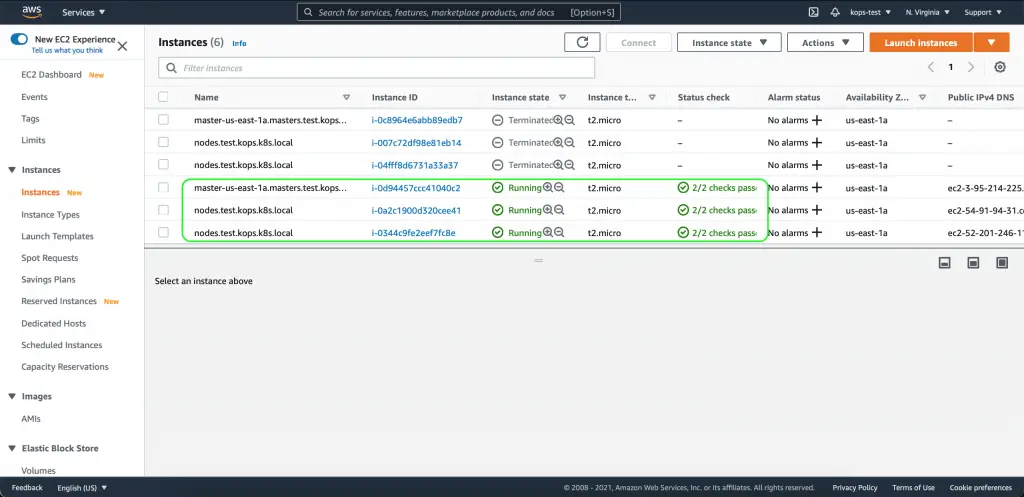

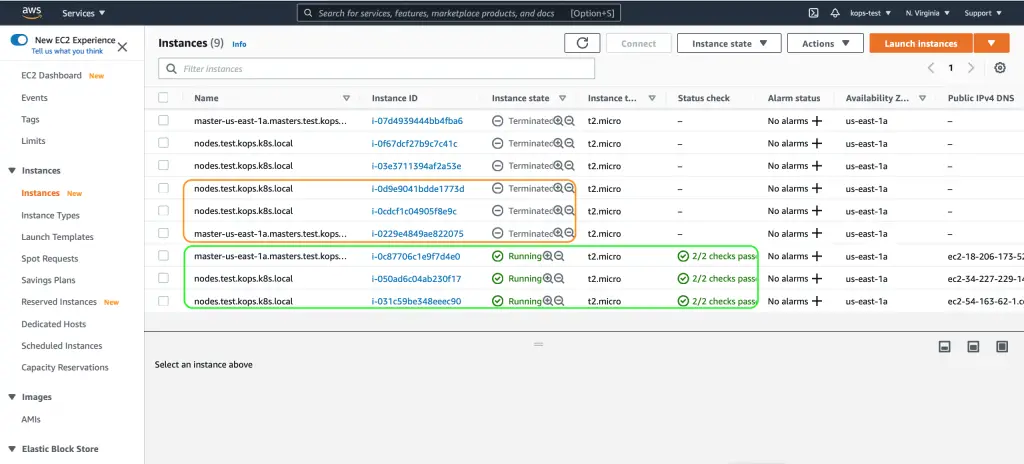

kube-system kube-scheduler-ip-172-20-46-172.ec2.internal 1/1 Running 0 8m37sVerify the instances on AWS Console

Now, log in to the AWS Console and verify the newly created cluster.

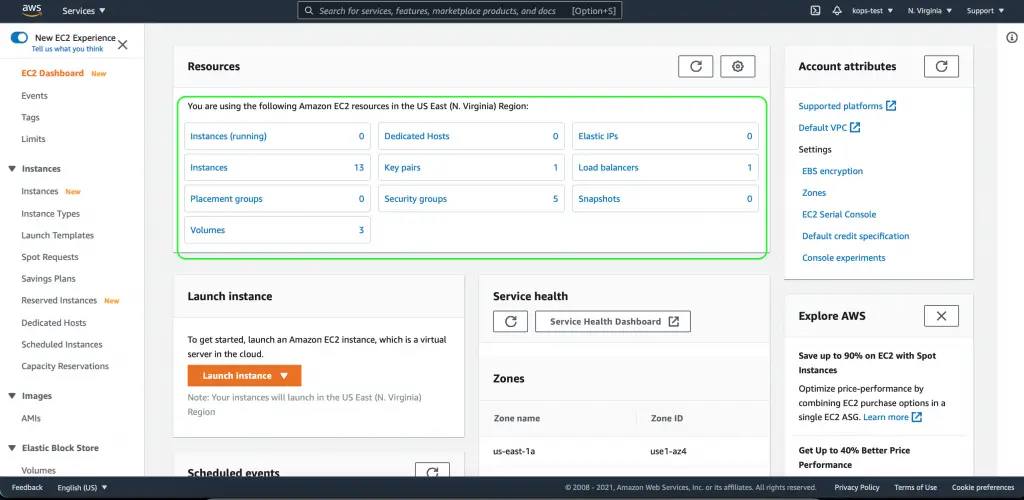

Verify all the AWS resources created by the kOps create command

Browse to the EC2 Dashboard, where you will find all the resources created by kOps.

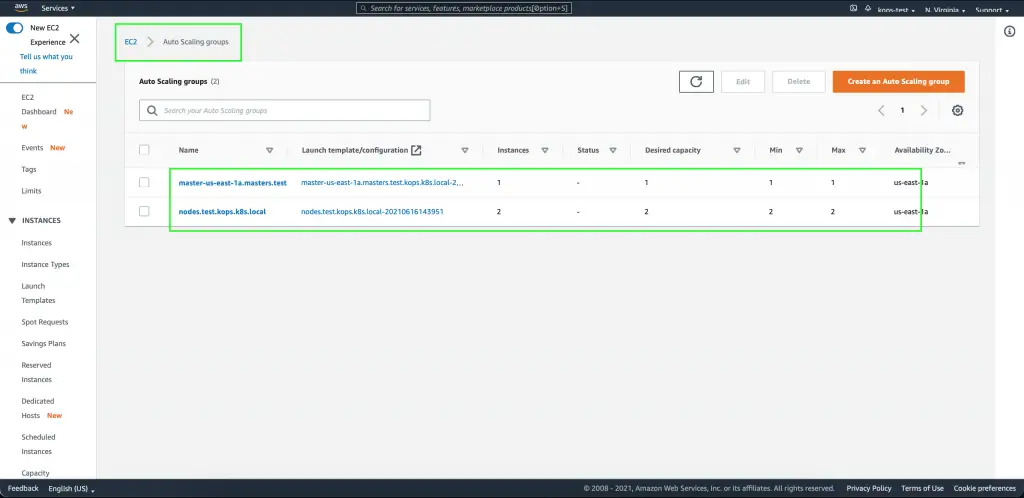

Verify the AWS Auto-Scaling groups created by kOps to manage the instances

An Auto Scaling group contains a collection of Amazon EC2 instances treated as a logical grouping for automatic scaling and management purposes.

How to Upgrade the kOps Cluster

Upgrading a kOps cluster is a straightforward process. The exact versions supported are defined at github.com/kubernetes/kops/blob/master/channels/stable.

You might have created a kOps cluster a while ago and now would like to upgrade to the latest version of Kubernetes or any recommended version. kOps supports rolling cluster upgrades, in which the master and worker nodes are upgraded.
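Before choosing a target, it can help to confirm that it really is newer than the version the cluster runs. Plain string comparison gets this wrong for versions such as 1.14.6 and 1.14.10. The sketch below compares the dot-separated fields numerically; the version_lt function is a hypothetical helper and assumes plain numeric versions without a leading "v".

```shell
# Returns success when version $1 is strictly older than version $2.
# Fields are compared numerically, so 1.14.6 is older than 1.14.10.
version_lt() {
  local a="$1" b="$2" x y
  while [ -n "$a" ] || [ -n "$b" ]; do
    x="${a%%.*}"; y="${b%%.*}"
    x="${x:-0}";  y="${y:-0}"      # a missing field counts as 0
    if [ "$x" -lt "$y" ]; then return 0; fi
    if [ "$x" -gt "$y" ]; then return 1; fi
    # Drop the field just compared; clear the string when no dot is left.
    case "$a" in *.*) a="${a#*.}" ;; *) a="" ;; esac
    case "$b" in *.*) b="${b#*.}" ;; *) b="" ;; esac
  done
  return 1                          # versions are equal
}

if version_lt 1.14.0 1.14.6; then
  echo "1.14.6 is newer than 1.14.0"
fi
```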

Upgrading to a specific version

Change the Kubernetes version by editing the kOps cluster spec. Let’s update it to 1.14.6.

$ kops edit cluster

Using cluster from kubectl context: test.kops.k8s.local

# Please edit the object below. Lines beginning with a '#' will be ignored,

# and an empty file will abort the edit. If an error occurs while saving this file will be

# reopened with the relevant failures.

#

apiVersion: kops/v1alpha2

kind: Cluster

metadata:

  creationTimestamp: 2021-06-14T22:16:51Z

  generation: 1

  name: test.kops.k8s.local

spec:

  api:

    loadBalancer:

      type: Public

  authorization:

    rbac: {}

  channel: stable

  cloudProvider: aws

  configBase: s3://my-kops-test/test.kops.k8s.local

  etcdClusters:

  - cpuRequest: 200m

    etcdMembers:

    - instanceGroup: master-us-east-1a

      name: a

    memoryRequest: 100Mi

    name: main

  - cpuRequest: 100m

    etcdMembers:

    - instanceGroup: master-us-east-1a

      name: a

    memoryRequest: 100Mi

    name: events

  iam:

    allowContainerRegistry: true

    legacy: false

  kubelet:

    anonymousAuth: false

  kubernetesApiAccess:

  - 0.0.0.0/0

  kubernetesVersion: 1.14.6

  masterInternalName: api.internal.test.kops.k8s.local

  masterPublicName: api.test.kops.k8s.local

  networkCIDR: 172.20.0.0/16

  networking:

    kubenet: {}

  nonMasqueradeCIDR: 100.64.0.0/10

"/var/folders/jn/qmn85hvx6js8dthzxgw1wg2r0000gn/T/kops-edit-tkr6kyaml" 58L, 1347C

Run the kOps Update

Now run the kops update command. Without --yes, it only previews the changes.

$ kops update cluster

Using cluster from kubectl context: test.kops.k8s.local

*********************************************************************************

A new kubernetes version is available: 1.14.10

Upgrading is recommended (try kops upgrade cluster)

More information: https://github.com/kubernetes/kops/blob/master/permalinks/upgrade_k8s.md#1.14.10

*********************************************************************************

I0615 00:09:43.531540 12833 apply_cluster.go:559] Gossip DNS: skipping DNS validation

I0615 00:09:43.576266 12833 executor.go:103] Tasks: 0 done / 90 total; 42 can run

I0615 00:09:44.510670 12833 executor.go:103] Tasks: 42 done / 90 total; 24 can run

I0615 00:09:45.279787 12833 executor.go:103] Tasks: 66 done / 90 total; 20 can run

I0615 00:09:46.446920 12833 executor.go:103] Tasks: 86 done / 90 total; 3 can run

I0615 00:09:46.958026 12833 executor.go:103] Tasks: 89 done / 90 total; 1 can run

I0615 00:09:47.135427 12833 executor.go:103] Tasks: 90 done / 90 total; 0 can run

Will modify resources:

LaunchConfiguration/master-us-east-1a.masters.test.kops.k8s.local

UserData

...

etcdServersOverrides:

- /events#http://127.0.0.1:4002

+ image: k8s.gcr.io/kube-apiserver:v1.14.6

- image: k8s.gcr.io/kube-apiserver:v1.14.0

insecureBindAddress: 127.0.0.1

insecurePort: 8080

...

clusterName: test.kops.k8s.local

configureCloudRoutes: true

+ image: k8s.gcr.io/kube-controller-manager:v1.14.6

- image: k8s.gcr.io/kube-controller-manager:v1.14.0

leaderElection:

leaderElect: true

...

cpuRequest: 100m

hostnameOverride: '@aws'

+ image: k8s.gcr.io/kube-proxy:v1.14.6

- image: k8s.gcr.io/kube-proxy:v1.14.0

logLevel: 2

kubeScheduler:

+ image: k8s.gcr.io/kube-scheduler:v1.14.6

- image: k8s.gcr.io/kube-scheduler:v1.14.0

leaderElection:

leaderElect: true

...

cat > kube_env.yaml << '__EOF_KUBE_ENV'

Assets:

+ - b5022066bdb4833407bcab2e636bb165a9ee7a95@https://storage.googleapis.com/kubernetes-release/release/v1.14.6/bin/linux/amd64/kubelet

- - c3b736fd0f003765c12d99f2c995a8369e6241f4@https://storage.googleapis.com/kubernetes-release/release/v1.14.0/bin/linux/amd64/kubelet

+ - 8a46184b3dd30bcc617da7787bc5971dc6a8233c@https://storage.googleapis.com/kubernetes-release/release/v1.14.6/bin/linux/amd64/kubectl

- - 7e3a3ea663153f900cbd52900a39c91fa9f334be@https://storage.googleapis.com/kubernetes-release/release/v1.14.0/bin/linux/amd64/kubectl

- 52e9d2de8a5f927307d9397308735658ee44ab8d@https://storage.googleapis.com/kubernetes-release/network-plugins/cni-plugins-amd64-v0.7.5.tgz

- ac028310c02614750331ec81abf2ef4bae57492c@https://github.com/kubernetes/kops/releases/download/1.14.0/linux-amd64-utils.tar.gz,https://kubeupv2.s3.amazonaws.com/kops/1.14.0/linux/amd64/utils.tar.gz

...

LaunchConfiguration/nodes.test.kops.k8s.local

UserData

...

cpuRequest: 100m

hostnameOverride: '@aws'

+ image: k8s.gcr.io/kube-proxy:v1.14.6

- image: k8s.gcr.io/kube-proxy:v1.14.0

logLevel: 2

kubelet:

...

cat > kube_env.yaml << '__EOF_KUBE_ENV'

Assets:

+ - b5022066bdb4833407bcab2e636bb165a9ee7a95@https://storage.googleapis.com/kubernetes-release/release/v1.14.6/bin/linux/amd64/kubelet

- - c3b736fd0f003765c12d99f2c995a8369e6241f4@https://storage.googleapis.com/kubernetes-release/release/v1.14.0/bin/linux/amd64/kubelet

+ - 8a46184b3dd30bcc617da7787bc5971dc6a8233c@https://storage.googleapis.com/kubernetes-release/release/v1.14.6/bin/linux/amd64/kubectl

- - 7e3a3ea663153f900cbd52900a39c91fa9f334be@https://storage.googleapis.com/kubernetes-release/release/v1.14.0/bin/linux/amd64/kubectl

- 52e9d2de8a5f927307d9397308735658ee44ab8d@https://storage.googleapis.com/kubernetes-release/network-plugins/cni-plugins-amd64-v0.7.5.tgz

- ac028310c02614750331ec81abf2ef4bae57492c@https://github.com/kubernetes/kops/releases/download/1.14.0/linux-amd64-utils.tar.gz,https://kubeupv2.s3.amazonaws.com/kops/1.14.0/linux/amd64/utils.tar.gz

...

Must specify --yes to apply changes

Run the actual update

Review the output and, if all the details are correct, rerun the same command with --yes.
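The preview is long, and the lines that matter most for a version change are the container image lines marked `+` and `-`. A minimal sketch of a filter that keeps only those lines is shown here; `image_changes` is a name invented for this example, and it assumes the preview uses the `+ image:` / `- image:` layout seen in the output above.

```shell
#!/bin/sh
# Print only the added (+) and removed (-) container image lines from
# `kops update cluster` preview output supplied on stdin.
# (Hypothetical helper, not part of kOps.)
image_changes() {
  grep -E '^[[:space:]]*[+-][[:space:]]+image:[[:space:]]'
}

# Example (cluster name taken from this tutorial):
#   kops update cluster test.kops.k8s.local 2>&1 | image_changes
```

If every `+` line shows the version you intended, the change is ready to apply.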

$ kops update cluster --yes

Using cluster from kubectl context: test.kops.k8s.local

*********************************************************************************

A new kubernetes version is available: 1.14.10

Upgrading is recommended (try kops upgrade cluster)

More information: https://github.com/kubernetes/kops/blob/master/permalinks/upgrade_k8s.md#1.14.10

*********************************************************************************

I0615 00:11:13.491526 12842 apply_cluster.go:559] Gossip DNS: skipping DNS validation

I0615 00:11:14.781056 12842 executor.go:103] Tasks: 0 done / 90 total; 42 can run

I0615 00:11:16.518367 12842 executor.go:103] Tasks: 42 done / 90 total; 24 can run

I0615 00:11:17.323584 12842 executor.go:103] Tasks: 66 done / 90 total; 20 can run

I0615 00:11:19.309725 12842 executor.go:103] Tasks: 86 done / 90 total; 3 can run

I0615 00:11:19.846077 12842 executor.go:103] Tasks: 89 done / 90 total; 1 can run

I0615 00:11:20.039809 12842 executor.go:103] Tasks: 90 done / 90 total; 0 can run

I0615 00:11:20.331956 12842 update_cluster.go:294] Exporting kubecfg for cluster

kops has set your kubectl context to test.kops.k8s.local

Cluster changes have been applied to the cloud.

Changes may require instances to restart: kops rolling-update cluster

Run the Rolling update

Finally, perform a rolling update of all cluster nodes using the kops rolling-update command. Without --yes, it only reports which instance groups need an update.

$ kops rolling-update cluster

Using cluster from kubectl context: test.kops.k8s.local

NAME STATUS NEEDUPDATE READY MIN MAX NODES

master-us-east-1a NeedsUpdate 1 0 1 1 1

nodes NeedsUpdate 2 0 2 2 2

Must specify --yes to rolling-update.

Run the actual Rolling update

Adding --yes updates all nodes in the cluster: first the master, then the workers. By default, kOps waits 5 minutes between restarting master nodes. The default wait between worker nodes depends on the kOps version (the kOps 1.14.0 run with default settings later in this tutorial logs a 4-minute wait). These values can be changed with the --master-interval and --node-interval options, respectively. The -v option sets the log verbosity level.
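To get a feel for how these intervals add up, the sketch below computes a rough lower bound for the duration of a rolling update. It assumes that every instance incurs the 1m30s drain wait that appears in the logs of this tutorial, plus its interval. Validation retries and instance boot time are ignored, so real runs take longer. The function name `rolling_update_min_seconds` is made up for this example.

```shell
#!/bin/sh
# Rough lower bound, in seconds, for a kOps rolling update.
# Arguments: masters nodes master_interval_s node_interval_s [drain_wait_s]
# (Hypothetical helper; the 90 s default mirrors the "Waiting for 1m30s for
# pods to stabilize after draining" log line.)
rolling_update_min_seconds() {
  masters=$1
  nodes=$2
  master_interval=$3
  node_interval=$4
  drain_wait=${5:-90}
  echo $(( masters * (drain_wait + master_interval) + nodes * (drain_wait + node_interval) ))
}

# Example: 1 master and 2 nodes with 1-minute intervals.
#   rolling_update_min_seconds 1 2 60 60
```

For one master and two nodes with waits of 300 and 240 seconds, the function prints 1050, which is about 17.5 minutes. The run with default settings logged later in this tutorial took a little over 20 minutes.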

I used a high verbosity level (-v 10), so the output below is cropped.

$ kops rolling-update cluster --master-interval=1m --node-interval=1m --yes -v 10

I0615 00:12:50.627873 12857 loader.go:359] Config loaded from file /Users/ravi/.kube/config

Using cluster from kubectl context: test.kops.k8s.local

I0615 00:12:50.628180 12857 factory.go:68] state store s3://my-kops-test

I0615 00:12:50.628466 12857 s3context.go:338] GOOS="darwin", assuming not running on EC2

I0615 00:12:50.628506 12857 s3context.go:170] defaulting region to "us-east-1"

I0615 00:12:51.220660 12857 s3context.go:210] found bucket in region "us-east-1"

I0615 00:12:51.220713 12857 s3fs.go:220] Reading file "s3://my-kops-test/test.kops.k8s.local/config"

I0615 00:12:51.706468 12857 loader.go:359] Config loaded from file /Users/ravi/.kube/config

I0615 00:12:51.708672 12857 round_trippers.go:419] curl -k -v -XGET -H "Accept: application/json, */*" -H "User-Agent: kops/v0.0.0 (darwin/amd64) kubernetes/$Format" -H "Authorization: Basic YWRtaW46clh5bFBSTXp3eVVpaElXRlNWRDZ2QThwVTdzUzlPS1A=" 'https://api-test-kops-k8s-local-11nu02-1704966992.us-east-1.elb.amazonaws.com/api/v1/nodes'

I0615 00:12:52.164525 12857 round_trippers.go:438] GET https://api-test-kops-k8s-local-11nu02-1704966992.us-east-1.elb.amazonaws.com/api/v1/nodes 200 OK in 455 milliseconds

I0615 00:12:52.164571 12857 round_trippers.go:444] Response Headers:

I0615 00:12:52.164582 12857 round_trippers.go:447] Content-Type: application/json

I0615 00:12:52.164590 12857 round_trippers.go:447] Date: Mon, 14 Jun 2021 23:12:53 GMT

I0615 00:12:52.262166 12857 request.go:942] Response Body: {"kind":"NodeList","apiVersion":"v1","metadata":{"selfLink":"/api/v1/nodes","resourceVersion":"4436"},"items":[{"metadata":{"name":"ip-172-20-46-172.ec2.internal","selfLink":"/api/v1/nodes/ip-172-20-46-172.ec2.internal","uid":"a72ea4c8-cd5f-11eb-8fde-0ab56e2276cf","resourceVersion":"4394","creationTimestamp":"2021-06-14T22:27:07Z","labels":{"beta.kubernetes.io/arch":"amd64","beta.kubernetes.io/instance-type":"t2.micro","beta.kubernetes.io/os":"linux","failure-domain.beta.kubernetes.io/region":"us-east-1","failure-domain.beta.kubernetes.io/zone":"us-east-1a","kops.k8s.io/instancegroup":"master-us-east-1a","kubernetes.io/arch":"amd64","kubernetes.io/hostname":"ip-172-20-46-172.ec2.internal","kubernetes.io/os":"linux","kubernetes.io/role":"master","node-role.kubernetes.io/master":""},"annotations":{"node.alpha.kubernetes.io/ttl":"0","volumes.kubernetes.io/controller-managed-attach-detach":"true"}},"spec":{"podCIDR":"100.96.0.0/24","providerID":"aws:///us-east-1a/i-0bf3e07a115464b49","taints":[{"key":"node-role.kubernetes.io/master","effect":"NoSchedule"}]},"status":{"capacity":{"attachable-volumes-aws-ebs":"39","cpu":"1","ephemeral-storage":"67096556Ki","hugepages-2Mi":"0","memory":"1006896Ki","pods":"110"},"allocatable":{"attachable-volumes-aws-ebs":"39","cpu":"1","ephemeral-storage":"61836185908","hugepages-2Mi":"0","memory":"904496Ki","pods":"110"},"conditions":[{"type":"NetworkUnavailable","status":"False","lastHeartbeatTime":"2021-06-14T22:27:18Z","lastTransitionTime":"2021-06-14T22:27:18Z","reason":"RouteCreated","message":"RouteController created a route"},{"type":"MemoryPressure","status":"False","lastHeartbeatTime":"2021-06-14T23:12:23Z"

I0615 00:12:52.265993 12857 s3fs.go:257] Listing objects in S3 bucket "my-kops-test" with prefix "test.kops.k8s.local/instancegroup/"

I0615 00:12:52.406555 12857 s3fs.go:285] Listed files in s3://my-kops-test/test.kops.k8s.local/instancegroup: [s3://my-kops-test/test.kops.k8s.local/instancegroup/master-us-east-1a s3://my-kops-test/test.kops.k8s.local/instancegroup/nodes]

I0615 00:12:52.406626 12857 s3fs.go:220] Reading file "s3://my-kops-test/test.kops.k8s.local/instancegroup/master-us-east-1a"

I0615 00:12:52.518428 12857 s3fs.go:220] Reading file "s3://my-kops-test/test.kops.k8s.local/instancegroup/nodes"

I0615 00:12:52.630815 12857 aws_cloud.go:1229] Querying EC2 for all valid zones in region "us-east-1"

I0615 00:12:52.631226 12857 request_logger.go:45] AWS request: ec2/DescribeAvailabilityZones

I0615 00:12:53.278207 12857 aws_cloud.go:483] Listing all Autoscaling groups matching cluster tags

I0615 00:12:53.280428 12857 request_logger.go:45] AWS request: autoscaling/DescribeTags

I0615 00:12:53.827013 12857 request_logger.go:45] AWS request: autoscaling/DescribeAutoScalingGroups

NAME STATUS NEEDUPDATE READY MIN MAX NODES

master-us-east-1a NeedsUpdate 1 0 1 1 1

nodes NeedsUpdate 2 0 2 2 2

I0615 00:12:54.029632 12857 rollingupdatecluster.go:392] Rolling update with drain and validate enabled.

I0615 00:12:54.029708 12857 aws_cloud.go:1229] Querying EC2 for all valid zones in region "us-eas

I0615 00:13:06.250975 12857 round_trippers.go:438] GET https://api-test-kops-k8s-local-11nu02-1704966992.us-east-1.elb.amazonaws.com/api/v1/namespaces/kube-system/pods/dns-controller-7f9457558d-kh9bp 404 Not Found in 99 milliseconds

I0615 00:13:06.251032 12857 round_trippers.go:444] Response Headers:

I0615 00:13:06.251049 12857 round_trippers.go:447] Date: Mon, 14 Jun 2021 23:13:07 GMT

I0615 00:13:06.251062 12857 round_trippers.go:447] Content-Type: application/json

I0615 00:13:06.251075 12857 round_trippers.go:447] Content-Length: 230

I0615 00:13:06.251113 12857 request.go:942] Response Body: {"kind":"Status","apiVersion":"v1","metadata":{},"status":"Failure","message":"pods \"dns-controller-7f9457558d-kh9bp\" not found","reason":"NotFound","details":{"name":"dns-controller-7f9457558d-kh9bp","kind":"pods"},"code":404}

pod/dns-controller-7f9457558d-kh9bp evicted

node/ip-172-20-46-172.ec2.internal evicted

I0615 00:13:06.252104 12857 instancegroups.go:362] Waiting for 1m30s for pods to stabilize after draining.

I0615 00:14:36.251589 12857 instancegroups.go:185] deleting node "ip-172-20-46-172.ec2.internal" from kubernetes

I0615 00:14:36.251724 12857 request.go:942] Request Body: {"kind":"DeleteOptions","apiVersion":"v1"}

[{"type":"Initialized","status":"True","lastProbeTime":null,"lastTransitionTime":"2021-06-14T23:24:21Z"},{"type":"Ready","status":"True","lastProbeTime":null,"lastTransitionTime":"2021-06-14T23:24:30Z"},{"type":"ContainersReady","status":"True","lastProbeTime":null,"lastTransitionTime":"2021-06-14T23:24:30Z"},{"type":"PodScheduled","status":"True","lastProbeTime":null,"lastTransitionTime":"2021-06-14T23:24:21Z"}],"hostIP":"172.20.49.134","podIP":"172.20.49.134","startTime":"2021-06-14T23:24:21Z","containerStatuses":[{"name":"kube-proxy","state":{"running":{"startedAt":"2021-06-14T23:24:29Z"}},"lastState":{},"ready":true,"restartCount":0,"image":"k8s.gcr.io/kube-proxy:v1.14.6","imageID":"docker-pullable://k8s.gcr.io/kube-proxy@sha256:e5c364dc75d816132bebf2d84b35518f0661fdeae39c686d92f9e5f9a07e96b9","containerID":"docker://d90f17c1b644469a488a59248735a6ec1f0b6f74417c944f52fd7fee144da71a"}],"qosClass":"Burstable"}},{"metadata":{"name":"kube-scheduler-ip-172-20-32-79.ec2.internal","namespace":"kube-system","selfLink":"/api/v1/namespaces/kube-system/pods/kube-scheduler-ip-172-20-32-79.ec2.internal","uid":"0d1bcbf9-cd67-11eb-b5e6-0ae320a5d35f","resourceVersion":"4707","creationTimestamp":"2021-06-14T23:20:05Z","labels":{"k8s-app":"kube-scheduler"},"annotations":{"kubernetes.io/config.hash":"63db87bca3b80e37d19ee68847233ffa","kubernetes.io/config.mirror":"63db87bca3b80e37d19ee68847233ffa","kubernetes.io/config.seen":"2021-06-14T23:17:19.845893249Z","kubernetes.io/config.source":"file","scheduler.alpha.kubernetes.io/critical-pod":""}},"spec":{"volumes":[{"name":"varlibkubescheduler","hostPath":{"path":"/var/lib/kube-scheduler","type":""}},{"name":"logfile","hostPath":{"path":"/var/log/kube-scheduler.log","type":""}}],"containers":[{"name":"kube-scheduler","image":"k8s.gcr.io/kube-scheduler:v1.14.6","command":["/bin/sh","-c","mkfifo /tmp/pipe; (tee -a /var/log/kube-scheduler.log \u003c /tmp/pipe \u0026 ) ; exec /usr/local/bin/kube-scheduler 
--kubeconfig=/var/lib/kube-scheduler/kubeconfig --leader-elect=true --v=2 \u003e /tmp/pipe 2\u003e\u00261"],"resources":{"requests":{"cpu":"100m"}},"volumeMounts":[{"name":"varlibkubescheduler","readOnly":true,"mountPath":"/var/lib/kube-scheduler"},{"name":"logfile","mountPath":"/var/log/kube-scheduler.log"}],"livenessProbe":{"httpGet":{"path":"/healthz","port":10251,"host":"127.0.0.1","scheme":"HTTP"},"initialDelaySeconds":15,"timeoutSeconds":15,"periodSeconds":10,"successThreshold":1,"failureThreshold":3},"terminationMessagePath":"/dev/termination-log","terminationMessagePolicy":"File","imagePullPolicy":"IfNotPresent"}],"restartPolicy":"Always","terminationGracePeriodSeconds":30,"dnsPolicy":"ClusterFirst","nodeName":"ip-172-20-32-79.ec2.internal","hostNetwork":true,"securityContext":{},"schedulerName":"default-scheduler","tolerations":[{"key":"CriticalAddonsOnly","operator":"Exists"},{"operator":"Exists","effect":"NoExecute"}],"priorityClassName":"system-cluster-critical","priority":2000000000,"enableServiceLinks":true},"status":{"phase":"Running","conditions":[{"type":"Initialized","status":"True","lastProbeTime":null,"lastTransitionTime":"2021-06-14T23:17:20Z"},{"type":"Ready","status":"True","lastProbeTime":null,"lastTransitionTime":"2021-06-14T23:17:25Z"},{"type":"ContainersReady","status":"True","lastProbeTime":null,"lastTransitionTime":"2021-06-14T23:17:25Z"},{"type":"PodScheduled","status":"True","lastProbeTime":null,"lastTransitionTime":"2021-06-14T23:17:20Z"}],"hostIP":"172.20.32.79","podIP":"172.20.32.79","startTime":"2021-06-14T23:17:20Z","containerStatuses":[{"name":"kube-scheduler","state":{"running":{"startedAt":"2021-06-14T23:17:24Z"}},"lastState":{},"ready":true,"restartCount":0,"image":"k8s.gcr.io/kube-scheduler:v1.14.6","imageID":"docker-pullable://k8s.gcr.io/kube-scheduler@sha256:0147e498f115390c6276014c5ac038e1128ba1cc0d15d28c380ba5a8cab34851","containerID":"docker://a009d3e58686b077d807ef6f38d4017d0d83d7b3c5132545203fc97f648dd1e6"}],"qosClass"
:"Burstable"}}]}

I0615 00:30:06.632986 12857 instancegroups.go:280] Cluster validated.

I0615 00:30:06.634895 12857 rollingupdate.go:184] Rolling update completed for cluster "test.kops.k8s.local"!

You have successfully upgraded to Kubernetes version 1.14.6!

Validate the cluster

Note: If you get an API error, wait 5-10 minutes for the Kubernetes cluster to become ready, and then retry.
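The wait-and-retry advice can be automated with a small loop. The sketch below is a generic helper, not a kOps feature. The name `retry` and its argument order are choices made for this example.

```shell
#!/bin/sh
# Run a command until it succeeds, up to <attempts> times, sleeping <delay>
# seconds between tries. Returns 0 on the first success, 1 if every try fails.
# Usage: retry <attempts> <delay-seconds> <command> [args...]
retry() {
  attempts=$1
  delay=$2
  shift 2
  n=1
  while [ "$n" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    echo "attempt $n/$attempts failed" >&2
    # Do not sleep after the final failed attempt.
    if [ "$n" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    n=$((n + 1))
  done
  return 1
}

# Example: retry for up to roughly 10 minutes, checking every 30 seconds.
#   retry 20 30 kops validate cluster
```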

$ kops validate cluster

Using cluster from kubectl context: test.kops.k8s.local

Validating cluster test.kops.k8s.local

INSTANCE GROUPS

NAME ROLE MACHINETYPE MIN MAX SUBNETS

master-us-east-1a Master t2.micro 1 1 us-east-1a

nodes Node t2.micro 2 2 us-east-1a

NODE STATUS

NAME ROLE READY

ip-172-20-32-79.ec2.internal master True

ip-172-20-49-134.ec2.internal node True

ip-172-20-55-143.ec2.internal node True

Your cluster test.kops.k8s.local is ready

Verify the Instance Groups

Verify the instance groups (ig) after the upgrade

$ kops get ig

Using cluster from kubectl context: test.kops.k8s.local

NAME ROLE MACHINETYPE MIN MAX ZONES

master-us-east-1a Master t2.micro 1 1 us-east-1a

nodes Node t2.micro 2 2 us-east-1a

Verify the Kubernetes Namespace

Verify the namespaces after the upgrade

$ kubectl get ns

NAME STATUS AGE

default Active 66m

kube-node-lease Active 66m

kube-public Active 66m

kube-system Active 66m

Verify the Kubernetes Pods

Then verify all the pods in all the namespaces

$ kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system dns-controller-7f9457558d-gd5dx 1/1 Running 0 19m

kube-system etcd-manager-events-ip-172-20-32-79.ec2.internal 1/1 Running 0 12m

kube-system etcd-manager-main-ip-172-20-32-79.ec2.internal 1/1 Running 0 12m

kube-system kube-apiserver-ip-172-20-32-79.ec2.internal 1/1 Running 2 12m

kube-system kube-controller-manager-ip-172-20-32-79.ec2.internal 1/1 Running 0 12m

kube-system kube-dns-66d58c65d5-c2nq5 3/3 Running 0 7m54s

kube-system kube-dns-66d58c65d5-w7wkp 3/3 Running 0 7m54s

kube-system kube-dns-autoscaler-6567f59ccb-6lvkl 1/1 Running 0 7m54s

kube-system kube-proxy-ip-172-20-32-79.ec2.internal 1/1 Running 0 12m

kube-system kube-proxy-ip-172-20-49-134.ec2.internal 1/1 Running 0 7m6s

kube-system kube-proxy-ip-172-20-55-143.ec2.internal 1/1 Running 0 111s

kube-system kube-scheduler-ip-172-20-32-79.ec2.internal 1/1 Running 0 12m

Verify the Kubernetes Version

Finally, verify the Kubernetes version through kubectl.

$ kubectl version --short

Client Version: v1.21.1

Server Version: v1.14.6

WARNING: version difference between client (1.21) and server (1.14) exceeds the supported minor version skew of +/-1

Upgrading to latest version

To upgrade to the latest Kubernetes version supported by your kOps version, run the kops upgrade cluster command.

$ kops upgrade cluster

Using cluster from kubectl context: test.kops.k8s.local

I0615 02:30:33.574356 13084 upgrade_cluster.go:216] Custom image (ami-0aeeebd8d2ab47354) has been provided for Instance Group "master-us-east-1a"; not updating image

I0615 02:30:33.574400 13084 upgrade_cluster.go:216] Custom image (ami-0aeeebd8d2ab47354) has been provided for Instance Group "nodes"; not updating image

ITEM PROPERTY OLD NEW

Cluster KubernetesVersion 1.14.0 1.14.10

Must specify --yes to perform upgrade

Run the actual Upgrade

The --yes option applies the change to the cluster configuration immediately.

$ kops upgrade cluster --yes

Using cluster from kubectl context: test.kops.k8s.local

I0615 02:48:58.463342 13135 upgrade_cluster.go:216] Custom image (ami-0aeeebd8d2ab47354) has been provided for Instance Group "master-us-east-1a"; not updating image

I0615 02:48:58.463389 13135 upgrade_cluster.go:216] Custom image (ami-0aeeebd8d2ab47354) has been provided for Instance Group "nodes"; not updating image

ITEM PROPERTY OLD NEW

Cluster KubernetesVersion 1.14.6 1.14.10

Updates applied to configuration.

You can now apply these changes, using `kops update cluster test.kops.k8s.local`

Run the update as instructed

Now repeat the same kops update and kops rolling-update process.

$ kops update cluster test.kops.k8s.local

I0615 02:52:32.318609 13149 apply_cluster.go:559] Gossip DNS: skipping DNS validation

I0615 02:52:32.368509 13149 executor.go:103] Tasks: 0 done / 90 total; 42 can run

I0615 02:52:33.454417 13149 executor.go:103] Tasks: 42 done / 90 total; 24 can run

I0615 02:52:34.114966 13149 executor.go:103] Tasks: 66 done / 90 total; 20 can run

I0615 02:52:35.234213 13149 executor.go:103] Tasks: 86 done / 90 total; 3 can run

I0615 02:52:35.772587 13149 executor.go:103] Tasks: 89 done / 90 total; 1 can run

I0615 02:52:35.949366 13149 executor.go:103] Tasks: 90 done / 90 total; 0 can run

Will modify resources:

LaunchConfiguration/master-us-east-1a.masters.test.kops.k8s.local

UserData

...

etcdServersOverrides:

- /events#http://127.0.0.1:4002

+ image: k8s.gcr.io/kube-apiserver:v1.14.10

- image: k8s.gcr.io/kube-apiserver:v1.14.6

insecureBindAddress: 127.0.0.1

insecurePort: 8080

...

clusterName: test.kops.k8s.local

configureCloudRoutes: true

+ image: k8s.gcr.io/kube-controller-manager:v1.14.10

- image: k8s.gcr.io/kube-controller-manager:v1.14.6

leaderElection:

leaderElect: true

...

cpuRequest: 100m

hostnameOverride: '@aws'

+ image: k8s.gcr.io/kube-proxy:v1.14.10

- image: k8s.gcr.io/kube-proxy:v1.14.6

logLevel: 2

kubeScheduler:

+ image: k8s.gcr.io/kube-scheduler:v1.14.10

- image: k8s.gcr.io/kube-scheduler:v1.14.6

leaderElection:

leaderElect: true

...

cat > kube_env.yaml << '__EOF_KUBE_ENV'

Assets:

+ - a8a816148b6b9278da9ec5c0d5c3abfbf1d38a96@https://storage.googleapis.com/kubernetes-release/release/v1.14.10/bin/linux/amd64/kubelet

- - b5022066bdb4833407bcab2e636bb165a9ee7a95@https://storage.googleapis.com/kubernetes-release/release/v1.14.6/bin/linux/amd64/kubelet

+ - 4018bc5a9aacc45cadafe3f3f2bc817fa0bd4e19@https://storage.googleapis.com/kubernetes-release/release/v1.14.10/bin/linux/amd64/kubectl

- - 8a46184b3dd30bcc617da7787bc5971dc6a8233c@https://storage.googleapis.com/kubernetes-release/release/v1.14.6/bin/linux/amd64/kubectl

- 52e9d2de8a5f927307d9397308735658ee44ab8d@https://storage.googleapis.com/kubernetes-release/network-plugins/cni-plugins-amd64-v0.7.5.tgz

- ac028310c02614750331ec81abf2ef4bae57492c@https://github.com/kubernetes/kops/releases/download/1.14.0/linux-amd64-utils.tar.gz,https://kubeupv2.s3.amazonaws.com/kops/1.14.0/linux/amd64/utils.tar.gz

...

LaunchConfiguration/nodes.test.kops.k8s.local

UserData

...

cpuRequest: 100m

hostnameOverride: '@aws'

+ image: k8s.gcr.io/kube-proxy:v1.14.10

- image: k8s.gcr.io/kube-proxy:v1.14.6

logLevel: 2

kubelet:

...

cat > kube_env.yaml << '__EOF_KUBE_ENV'

Assets:

+ - a8a816148b6b9278da9ec5c0d5c3abfbf1d38a96@https://storage.googleapis.com/kubernetes-release/release/v1.14.10/bin/linux/amd64/kubelet

- - b5022066bdb4833407bcab2e636bb165a9ee7a95@https://storage.googleapis.com/kubernetes-release/release/v1.14.6/bin/linux/amd64/kubelet

+ - 4018bc5a9aacc45cadafe3f3f2bc817fa0bd4e19@https://storage.googleapis.com/kubernetes-release/release/v1.14.10/bin/linux/amd64/kubectl

- - 8a46184b3dd30bcc617da7787bc5971dc6a8233c@https://storage.googleapis.com/kubernetes-release/release/v1.14.6/bin/linux/amd64/kubectl

- 52e9d2de8a5f927307d9397308735658ee44ab8d@https://storage.googleapis.com/kubernetes-release/network-plugins/cni-plugins-amd64-v0.7.5.tgz

- ac028310c02614750331ec81abf2ef4bae57492c@https://github.com/kubernetes/kops/releases/download/1.14.0/linux-amd64-utils.tar.gz,https://kubeupv2.s3.amazonaws.com/kops/1.14.0/linux/amd64/utils.tar.gz

...

Must specify --yes to apply changes

Add --yes to confirm the change

$ kops update cluster test.kops.k8s.local --yes

I0615 02:52:46.466624 13156 apply_cluster.go:559] Gossip DNS: skipping DNS validation

I0615 02:52:47.661334 13156 executor.go:103] Tasks: 0 done / 90 total; 42 can run

I0615 02:52:48.465652 13156 executor.go:103] Tasks: 42 done / 90 total; 24 can run

I0615 02:52:49.187862 13156 executor.go:103] Tasks: 66 done / 90 total; 20 can run

I0615 02:52:52.861734 13156 executor.go:103] Tasks: 86 done / 90 total; 3 can run

I0615 02:52:53.357615 13156 executor.go:103] Tasks: 89 done / 90 total; 1 can run

I0615 02:52:53.546861 13156 executor.go:103] Tasks: 90 done / 90 total; 0 can run

I0615 02:52:53.838062 13156 update_cluster.go:294] Exporting kubecfg for cluster

kops has set your kubectl context to test.kops.k8s.local

Cluster changes have been applied to the cloud.

Changes may require instances to restart: kops rolling-update cluster

Now, proceed with the rolling update.

$ kops rolling-update cluster

Using cluster from kubectl context: test.kops.k8s.local

NAME STATUS NEEDUPDATE READY MIN MAX NODES

master-us-east-1a NeedsUpdate 1 0 1 1 1

nodes NeedsUpdate 2 0 2 2 2

Must specify --yes to rolling-update.

Verify the status, then add --yes to proceed with the actual update.
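In a script, the status check can be done by reading the table that kops rolling-update cluster prints. The sketch below assumes the column layout shown above, with the group name first and STATUS second. The function name `groups_needing_update` is invented for this example.

```shell
#!/bin/sh
# List instance groups whose STATUS column is "NeedsUpdate" in the table
# printed by `kops rolling-update cluster` (read from stdin).
# Exit status: 0 if at least one group needs an update, 1 otherwise.
# (Hypothetical helper, not part of kOps.)
groups_needing_update() {
  awk '$2 == "NeedsUpdate" { print $1; found = 1 } END { exit (found ? 0 : 1) }'
}

# Example:
#   kops rolling-update cluster 2>/dev/null | groups_needing_update
```

For the table above, this prints master-us-east-1a and nodes, one per line.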

$ kops rolling-update cluster --yes

Using cluster from kubectl context: test.kops.k8s.local

NAME STATUS NEEDUPDATE READY MIN MAX NODES

master-us-east-1a NeedsUpdate 1 0 1 1 1

nodes NeedsUpdate 2 0 2 2 2

I0615 02:54:40.946025 13174 instancegroups.go:165] Draining the node: "ip-172-20-32-79.ec2.internal".

node/ip-172-20-32-79.ec2.internal cordoned

node/ip-172-20-32-79.ec2.internal cordoned

pod/dns-controller-7f9457558d-gd5dx evicted

node/ip-172-20-32-79.ec2.internal evicted

I0615 02:54:49.172896 13174 instancegroups.go:362] Waiting for 1m30s for pods to stabilize after draining.

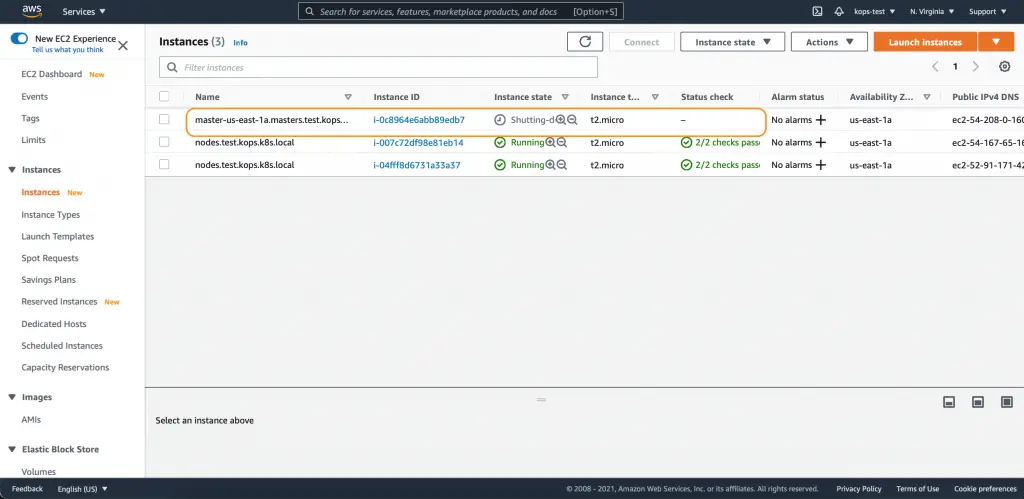

I0615 02:56:19.175659 13174 instancegroups.go:185] deleting node "ip-172-20-32-79.ec2.internal" from kubernetes

I0615 02:56:19.284069 13174 instancegroups.go:303] Stopping instance "i-0c8964e6abb89edb7", node "ip-172-20-32-79.ec2.internal", in group "master-us-east-1a.masters.test.kops.k8s.local" (this may take a while).

I0615 02:56:19.961070 13174 instancegroups.go:198] waiting for 5m0s after terminating instance

I0615 03:01:19.957043 13174 instancegroups.go:209] Validating the cluster.

I0615 03:01:20.896401 13174 instancegroups.go:270] Cluster did not validate, will try again in "30s" until duration "5m0s" expires: error listing nodes: Get https://api-test-kops-k8s-local-11nu02-1704966992.us-east-1.elb.amazonaws.com/api/v1/nodes: EOF.

I0615 03:01:52.649911 13174 instancegroups.go:277] Cluster did not pass validation, will try again in "30s" until duration "5m0s" expires: master "ip-172-20-54-32.ec2.internal" is not ready, kube-system pod "dns-controller-7f9457558d-24jqp" is pending, kube-system pod "kube-controller-manager-ip-172-20-54-32.ec2.internal" is pending.

I0615 03:02:22.150016 13174 instancegroups.go:280] Cluster validated.

I0615 03:02:23.021434 13174 instancegroups.go:165] Draining the node: "ip-172-20-49-134.ec2.internal".

node/ip-172-20-49-134.ec2.internal cordoned

node/ip-172-20-49-134.ec2.internal cordoned

pod/kube-dns-autoscaler-6567f59ccb-6lvkl evicted

pod/kube-dns-66d58c65d5-w7wkp evicted

pod/kube-dns-66d58c65d5-c2nq5 evicted

node/ip-172-20-49-134.ec2.internal evicted

I0615 03:03:30.305403 13174 instancegroups.go:362] Waiting for 1m30s for pods to stabilize after draining.

I0615 03:05:00.304085 13174 instancegroups.go:185] deleting node "ip-172-20-49-134.ec2.internal" from kubernetes

I0615 03:05:00.423180 13174 instancegroups.go:303] Stopping instance "i-007c72df98e81eb14", node "ip-172-20-49-134.ec2.internal", in group "nodes.test.kops.k8s.local" (this may take a while).

I0615 03:05:01.186652 13174 instancegroups.go:198] waiting for 4m0s after terminating instance

I0615 03:09:01.185349 13174 instancegroups.go:209] Validating the cluster.

I0615 03:09:04.058784 13174 instancegroups.go:280] Cluster validated.

I0615 03:09:04.058825 13174 instancegroups.go:165] Draining the node: "ip-172-20-55-143.ec2.internal".

node/ip-172-20-55-143.ec2.internal cordoned

node/ip-172-20-55-143.ec2.internal cordoned

pod/kube-dns-autoscaler-6567f59ccb-xhsnq evicted

pod/kube-dns-66d58c65d5-d2mtr evicted

pod/kube-dns-66d58c65d5-c9nf7 evicted

node/ip-172-20-55-143.ec2.internal evicted

I0615 03:09:37.175582 13174 instancegroups.go:362] Waiting for 1m30s for pods to stabilize after draining.

I0615 03:11:07.178359 13174 instancegroups.go:185] deleting node "ip-172-20-55-143.ec2.internal" from kubernetes

I0615 03:11:07.286188 13174 instancegroups.go:303] Stopping instance "i-04fff8d6731a33a37", node "ip-172-20-55-143.ec2.internal", in group "nodes.test.kops.k8s.local" (this may take a while).

I0615 03:11:08.091372 13174 instancegroups.go:198] waiting for 4m0s after terminating instance

I0615 03:15:08.090036 13174 instancegroups.go:209] Validating the cluster.

I0615 03:15:09.812015 13174 instancegroups.go:280] Cluster validated.

I0615 03:15:09.812084 13174 rollingupdate.go:184] Rolling update completed for cluster "test.kops.k8s.local"!

Validate the kOps Cluster

Validate the kops cluster after the latest upgrade

$ kops validate cluster

Using cluster from kubectl context: test.kops.k8s.local

Validating cluster test.kops.k8s.local

INSTANCE GROUPS

NAME ROLE MACHINETYPE MIN MAX SUBNETS

master-us-east-1a Master t2.micro 1 1 us-east-1a

nodes Node t2.micro 2 2 us-east-1a

NODE STATUS

NAME ROLE READY

ip-172-20-33-82.ec2.internal node True

ip-172-20-54-32.ec2.internal master True

ip-172-20-58-64.ec2.internal node True

Your cluster test.kops.k8s.local is ready

Verify all the Kubernetes Pods

Verify all the pods in all the namespaces

$ kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system dns-controller-7f9457558d-24jqp 1/1 Running 0 21m

kube-system etcd-manager-events-ip-172-20-54-32.ec2.internal 1/1 Running 0 14m

kube-system etcd-manager-main-ip-172-20-54-32.ec2.internal 1/1 Running 0 13m

kube-system kube-apiserver-ip-172-20-54-32.ec2.internal 1/1 Running 3 14m

kube-system kube-controller-manager-ip-172-20-54-32.ec2.internal 1/1 Running 0 14m

kube-system kube-dns-66d58c65d5-hklfw 3/3 Running 0 7m31s

kube-system kube-dns-66d58c65d5-rzk7r 3/3 Running 0 7m32s

kube-system kube-dns-autoscaler-6567f59ccb-ltd86 1/1 Running 0 7m31s

kube-system kube-proxy-ip-172-20-33-82.ec2.internal 1/1 Running 0 99s

kube-system kube-proxy-ip-172-20-54-32.ec2.internal 1/1 Running 0 14m

kube-system kube-proxy-ip-172-20-58-64.ec2.internal 1/1 Running 0 8m7s

kube-system kube-scheduler-ip-172-20-54-32.ec2.internal 1/1 Running 0 14m

Verify the Kubernetes Version

Finally, verify the Kubernetes version after the latest upgrade

$ kubectl version --short

Client Version: v1.21.1

Server Version: v1.14.10

WARNING: version difference between client (1.21) and server (1.14) exceeds the supported minor version skew of +/-1

If you want kops upgrade to take the cluster to the latest Kubernetes release, first download and install the latest version of kOps.

I ran this example with kOps 1.14.0, so kOps upgraded the cluster to Kubernetes 1.14.10.
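The version-skew warning printed by kubectl above can also be checked in a script. The sketch below reads the `kubectl version --short` output format used in this tutorial and prints the difference between the client and server minor versions. It assumes both sides share the same major version. The function name `minor_skew` is made up for this example.

```shell
#!/bin/sh
# Read `kubectl version --short` output on stdin and print the absolute
# difference between the client and server minor versions.
# (Hypothetical helper; assumes lines of the form "Client Version: v1.21.1".)
minor_skew() {
  awk '
    /^Client Version:/ { split($3, c, ".") }
    /^Server Version:/ { split($3, s, ".") }
    END {
      d = c[2] - s[2]
      if (d < 0) d = -d
      print d
    }'
}

# Example:
#   kubectl version --short 2>/dev/null | minor_skew
```

For the output above (client v1.21.1, server v1.14.10) the result is 7, well beyond the skew of 1 that the warning describes as supported.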

Rollback to a specific version

If the upgrade produces errors, if you are unsure that you upgraded to the right version because you did not check the release notes, or if you need to return to a specific version for application compatibility, kOps provides an easy way to roll back.
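Before changing the version, a script can confirm which direction the change goes. The sketch below compares two MAJOR.MINOR.PATCH versions numerically, which matters because a plain string comparison would rank 1.14.6 above 1.14.10. The function name `version_change` is invented for this example, and it assumes each component is below 1000.

```shell
#!/bin/sh
# Compare two versions of the form MAJOR.MINOR.PATCH and print "upgrade",
# "rollback", or "same" for a change from <current> to <target>.
# (Hypothetical helper, not part of kOps.)
version_change() {
  # Zero-pad minor and patch so a plain integer comparison orders versions.
  cur=$(echo "$1" | awk -F. '{ printf "%d%03d%03d", $1, $2, $3 }')
  tgt=$(echo "$2" | awk -F. '{ printf "%d%03d%03d", $1, $2, $3 }')
  if [ "$tgt" -gt "$cur" ]; then
    echo upgrade
  elif [ "$tgt" -lt "$cur" ]; then
    echo rollback
  else
    echo same
  fi
}

# Example: the change made in this section.
#   version_change 1.16.15 1.15.12
```

For the 1.16.15 to 1.15.12 change used in this section, the function prints rollback.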

Edit the Kubernetes version to the desired one. Here I am rolling back from 1.16.15 to 1.15.12.

$ kops edit cluster

Using cluster from kubectl context: test.kops.k8s.local

Once you have edited the cluster, run the update command

$ kops update cluster test.kops.k8s.local --yes

*********************************************************************************

A new kops version is available: 1.20.0

Upgrading is recommended

More information: https://github.com/kubernetes/kops/blob/master/permalinks/upgrade_kops.md#1.20.0

*********************************************************************************

I0615 18:36:30.379491 13818 apply_cluster.go:556] Gossip DNS: skipping DNS validation

I0615 18:36:31.894300 13818 executor.go:103] Tasks: 0 done / 92 total; 44 can run

I0615 18:36:32.830976 13818 executor.go:103] Tasks: 44 done / 92 total; 24 can run

I0615 18:36:33.569770 13818 executor.go:103] Tasks: 68 done / 92 total; 20 can run

I0615 18:36:36.189701 13818 executor.go:103] Tasks: 88 done / 92 total; 3 can run

I0615 18:36:36.719430 13818 executor.go:103] Tasks: 91 done / 92 total; 1 can run

I0615 18:36:36.962661 13818 executor.go:103] Tasks: 92 done / 92 total; 0 can run

I0615 18:36:37.134989 13818 update_cluster.go:305] Exporting kubecfg for cluster

kops has set your kubectl context to test.kops.k8s.local

Cluster changes have been applied to the cloud.

Changes may require instances to restart: kops rolling-update cluster
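Before replacing any instances, it can be worth confirming that the edited version was actually saved to the cluster spec. The sketch below is an illustration rather than a kOps feature: `spec_version` is a hypothetical helper, and it assumes the manifest printed by `kops get cluster <name> -o yaml` contains a `kubernetesVersion:` key on its own line.

```shell
#!/usr/bin/env bash
# spec_version reads a cluster manifest (YAML) on stdin and prints the
# value of the first kubernetesVersion key it finds.
# Hypothetical helper, for illustration only.
spec_version() {
  awk '$1 == "kubernetesVersion:" { gsub(/"/, "", $2); print $2; exit }'
}
```

A possible use is `kops get cluster test.kops.k8s.local -o yaml | spec_version`, which should print the version you set in the editor.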

Then run the rolling-update command

$ kops rolling-update cluster --yes

If the rolling-update cluster command fails with an API error like the one below

$ kops rolling-update cluster --yes -v 5

Using cluster from kubectl context: test.kops.k8s.local

I0615 18:41:19.084496 13830 factory.go:68] state store s3://my-kops-test

I0615 18:41:19.084664 13830 s3context.go:338] GOOS="darwin", assuming not running on EC2

I0615 18:41:19.084680 13830 s3context.go:170] defaulting region to "us-east-1"

I0615 18:41:19.708794 13830 s3context.go:210] found bucket in region "us-east-1"

I0615 18:41:19.708839 13830 s3fs.go:285] Reading file "s3://my-kops-test/test.kops.k8s.local/config"

Unable to reach the kubernetes API.

Use --cloudonly to do a rolling-update without confirming progress with the k8s API

error listing nodes in cluster: Get https://api-test-kops-k8s-local-11nu02-1704966992.us-east-1.elb.amazonaws.com/api/v1/nodes: EOF

Then use the --cloudonly flag, which performs the rolling update without confirming progress with the Kubernetes API

Note: with --cloudonly, the rolling update does not call the Kubernetes API and does not verify the cluster status between instance replacements. It calls only the cloud provider APIs (here, the AWS APIs) to do the update, and it does not drain the nodes either. When the Kubernetes API is up and running, you should not use the --cloudonly flag with a rolling update.

$ kops rolling-update cluster --yes -v 5 --cloudonly

Using cluster from kubectl context: test.kops.k8s.local

I0615 18:41:28.797978 13837 factory.go:68] state store s3://my-kops-test

I0615 18:41:28.798178 13837 s3context.go:338] GOOS="darwin", assuming not running on EC2

I0615 18:41:28.798200 13837 s3context.go:170] defaulting region to "us-east-1"

I0615 18:41:29.361008 13837 s3context.go:210] found bucket in region "us-east-1"

I0615 18:41:29.361053 13837 s3fs.go:285] Reading file "s3://my-kops-test/test.kops.k8s.local/config"

I0615 18:41:29.840169 13837 s3fs.go:322] Listing objects in S3 bucket "my-kops-test" with prefix "test.kops.k8s.local/instancegroup/"

I0615 18:41:29.973245 13837 s3fs.go:285] Reading file "s3://my-kops-test/test.kops.k8s.local/instancegroup/master-us-east-1a"

I0615 18:41:30.080363 13837 s3fs.go:285] Reading file "s3://my-kops-test/test.kops.k8s.local/instancegroup/nodes"

I0615 18:41:30.191391 13837 aws_cloud.go:1248] Querying EC2 for all valid zones in region "us-east-1"

I0615 18:41:30.191574 13837 request_logger.go:45] AWS request: ec2/DescribeAvailabilityZones

I0615 18:41:31.238777 13837 aws_cloud.go:487] Listing all Autoscaling groups matching cluster tags

I0615 18:41:31.239009 13837 request_logger.go:45] AWS request: autoscaling/DescribeTags

I0615 18:41:31.762117 13837 request_logger.go:45] AWS request: autoscaling/DescribeAutoScalingGroups

NAME STATUS NEEDUPDATE READY MIN MAX

master-us-east-1a NeedsUpdate 1 0 1 1

nodes NeedsUpdate 2 0 2 2

I0615 18:41:31.949116 13837 rollingupdatecluster.go:394] Rolling update with drain and validate enabled.

I0615 18:41:31.949160 13837 instancegroups.go:129] Not validating cluster as validation is turned off via the cloud-only flag.

W0615 18:41:31.949179 13837 instancegroups.go:152] Not draining cluster nodes as 'cloudonly' flag is set.

I0615 18:41:31.949196 13837 instancegroups.go:304] Stopping instance "i-0229e4849ae822075", in group "master-us-east-1a.masters.test.kops.k8s.local" (this may take a while).

I0615 18:41:31.949331 13837 request_logger.go:45] AWS request: autoscaling/TerminateInstanceInAutoScalingGroup

I0615 18:41:32.218223 13837 instancegroups.go:189] waiting for 15s after terminating instance

W0615 18:41:47.218858 13837 instancegroups.go:197] Not validating cluster as cloudonly flag is set.

I0615 18:41:47.218969 13837 instancegroups.go:129] Not validating cluster as validation is turned off via the cloud-only flag.

W0615 18:41:47.218988 13837 instancegroups.go:152] Not draining cluster nodes as 'cloudonly' flag is set.

I0615 18:41:47.218998 13837 instancegroups.go:304] Stopping instance "i-0cdcf1c04905f8e9c", in group "nodes.test.kops.k8s.local" (this may take a while).

I0615 18:41:47.219274 13837 request_logger.go:45] AWS request: autoscaling/TerminateInstanceInAutoScalingGroup

I0615 18:41:47.819123 13837 instancegroups.go:189] waiting for 15s after terminating instance

W0615 18:42:02.820447 13837 instancegroups.go:197] Not validating cluster as cloudonly flag is set.

W0615 18:42:02.820523 13837 instancegroups.go:152] Not draining cluster nodes as 'cloudonly' flag is set.

I0615 18:42:02.820539 13837 instancegroups.go:304] Stopping instance "i-0d9e9041bdde1773d", in group "nodes.test.kops.k8s.local" (this may take a while).

I0615 18:42:02.820678 13837 request_logger.go:45] AWS request: autoscaling/TerminateInstanceInAutoScalingGroup

I0615 18:42:03.462790 13837 instancegroups.go:189] waiting for 15s after terminating instance

W0615 18:42:18.464102 13837 instancegroups.go:197] Not validating cluster as cloudonly flag is set.

I0615 18:42:18.464225 13837 rollingupdate.go:189] Rolling update completed for cluster "test.kops.k8s.local"!
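Since --cloudonly skips both draining and validation, it makes sense to reach for it only when the API really is unreachable. The following is a minimal sketch of that decision, under a few assumptions: `rolling_update` is a hypothetical wrapper name, the current kubectl context points at the cluster being updated, and a failing `kubectl get nodes` is treated as "API unreachable". The `KOPS` and `KUBECTL` variables exist only so the commands can be swapped out for a dry run.

```shell
#!/usr/bin/env bash
# Both commands can be overridden, e.g. KOPS=echo for a dry run.
KOPS="${KOPS:-kops}"
KUBECTL="${KUBECTL:-kubectl}"

# rolling_update runs a normal rolling update when the Kubernetes API
# answers, and adds --cloudonly only when it does not.
# Hypothetical wrapper, for illustration only.
rolling_update() {
  local cluster="$1"
  if "$KUBECTL" get nodes --request-timeout=10s >/dev/null 2>&1; then
    "$KOPS" rolling-update cluster "$cluster" --yes
  else
    echo "API unreachable, falling back to --cloudonly (no drain, no validation)" >&2
    "$KOPS" rolling-update cluster "$cluster" --yes --cloudonly
  fi
}
```

Calling `rolling_update test.kops.k8s.local` would then use the safer path whenever the API responds.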

Wait for some time, and then try to validate the cluster
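How long to wait depends on how quickly the replacement instances boot, so instead of guessing, the validation can be retried until it passes. The sketch below is a generic retry loop, not something kOps provides: `retry_until_ok` is a hypothetical helper, and it assumes that `kops validate cluster` exits with a non-zero status while the cluster is not yet ready.

```shell
#!/usr/bin/env bash
# retry_until_ok ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Runs COMMAND until it succeeds or ATTEMPTS runs have failed.
# Hypothetical helper, for illustration only.
retry_until_ok() {
  local attempts="$1" delay="$2"
  shift 2
  local i=1
  while [ "$i" -le "$attempts" ]; do
    "$@" && return 0
    if [ "$i" -lt "$attempts" ]; then
      echo "attempt $i/$attempts failed, retrying in ${delay}s" >&2
      sleep "$delay"
    fi
    i=$(( i + 1 ))
  done
  return 1
}
```

For example, `retry_until_ok 20 30 kops validate cluster` would try up to 20 times, 30 seconds apart. A single manual run looks like this: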

$ kops validate cluster

Using cluster from kubectl context: test.kops.k8s.local

Validating cluster test.kops.k8s.local

INSTANCE GROUPS

NAME ROLE MACHINETYPE MIN MAX SUBNETS

master-us-east-1a Master t2.micro 1 1 us-east-1a

nodes Node t2.micro 2 2 us-east-1a

NODE STATUS

NAME ROLE READY

ip-172-20-47-15.ec2.internal node True

ip-172-20-55-240.ec2.internal node True

ip-172-20-57-81.ec2.internal master True

Your cluster test.kops.k8s.local is ready

Then verify all the Kubernetes pods

$ kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system dns-controller-5ccc8d594b-t49n6 1/1 Running 0 2m10s

kube-system etcd-manager-events-ip-172-20-57-81.ec2.internal 1/1 Running 0 3m25s

kube-system etcd-manager-main-ip-172-20-57-81.ec2.internal 1/1 Running 0 3m26s

kube-system kube-apiserver-ip-172-20-57-81.ec2.internal 1/1 Running 2 2m26s

kube-system kube-controller-manager-ip-172-20-57-81.ec2.internal 1/1 Running 0 2m45s

kube-system kube-dns-684d554478-f7glt 3/3 Running 0 2m10s

kube-system kube-dns-684d554478-jjrmb 3/3 Running 0 2m10s

kube-system kube-dns-autoscaler-7f964b85c7-5k6rb 1/1 Running 0 2m10s

kube-system kube-proxy-ip-172-20-47-15.ec2.internal 1/1 Running 0 58s

kube-system kube-proxy-ip-172-20-55-240.ec2.internal 1/1 Running 0 93s

kube-system kube-proxy-ip-172-20-57-81.ec2.internal 1/1 Running 0 2m29s

kube-system kube-scheduler-ip-172-20-57-81.ec2.internal 1/1 Running 0 3m29s

Verify the Kubernetes version through kubectl version

$ kubectl version --short

Client Version: v1.21.1

Server Version: v1.15.12

Delete the kOps cluster

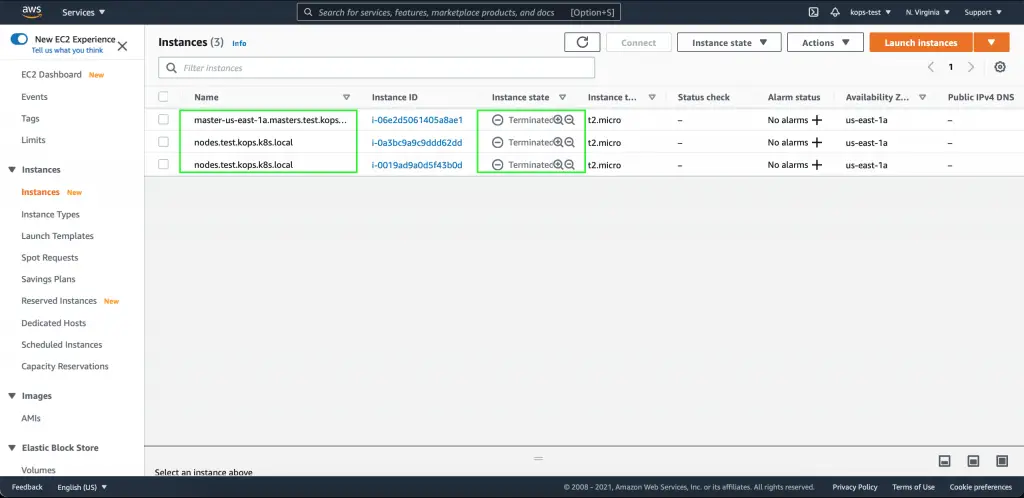

To delete the cluster, use the kops delete cluster command. It deletes a Kubernetes cluster and all associated resources.

Note: once the cluster is deleted, it cannot be recovered.

$ kops delete cluster test.kops.k8s.local

TYPE NAME ID

autoscaling-config master-us-east-1a.masters.test.kops.k8s.local-20210616142614 master-us-east-1a.masters.test.kops.k8s.local-20210616142614

autoscaling-config master-us-east-1a.masters.test.kops.k8s.local-20210616143951 master-us-east-1a.masters.test.kops.k8s.local-20210616143951

autoscaling-config master-us-east-1a.masters.test.kops.k8s.local-20210616220957 master-us-east-1a.masters.test.kops.k8s.local-20210616220957

autoscaling-config nodes.test.kops.k8s.local-20210616142614 nodes.test.kops.k8s.local-20210616142614

autoscaling-config nodes.test.kops.k8s.local-20210616143951 nodes.test.kops.k8s.local-20210616143951

autoscaling-config nodes.test.kops.k8s.local-20210616220957 nodes.test.kops.k8s.local-20210616220957

autoscaling-group master-us-east-1a.masters.test.kops.k8s.local master-us-east-1a.masters.test.kops.k8s.local

autoscaling-group nodes.test.kops.k8s.local nodes.test.kops.k8s.local

dhcp-options test.kops.k8s.local dopt-00efd994a46fcf605

iam-instance-profile masters.test.kops.k8s.local masters.test.kops.k8s.local

iam-instance-profile nodes.test.kops.k8s.local nodes.test.kops.k8s.local

iam-role masters.test.kops.k8s.local masters.test.kops.k8s.local

iam-role nodes.test.kops.k8s.local nodes.test.kops.k8s.local

instance master-us-east-1a.masters.test.kops.k8s.local i-06e2d5061405a8ae1

instance nodes.test.kops.k8s.local i-0019ad9a0d5f43b0d

instance nodes.test.kops.k8s.local i-0a3bc9a9c9ddd62dd

internet-gateway test.kops.k8s.local igw-0cd3dd00659e09e68

keypair kubernetes.test.kops.k8s.local-83:96:15:36:d5:27:79:ea:42:14:0c:65:58:56:a9:c9 kubernetes.test.kops.k8s.local-83:96:15:36:d5:27:79:ea:42:14:0c:65:58:56:a9:c9

load-balancer api.test.kops.k8s.local api-test-kops-k8s-local-11nu02

route-table test.kops.k8s.local rtb-09d815bddba9ac18f

security-group api-elb.test.kops.k8s.local sg-024eb58d334429195

security-group masters.test.kops.k8s.local sg-0e350cfbddc121115

security-group nodes.test.kops.k8s.local sg-0496b38fd1afeb086

subnet us-east-1a.test.kops.k8s.local subnet-052bc61c1612028a0

volume a.etcd-events.test.kops.k8s.local vol-04e6c2a93036535aa

volume a.etcd-main.test.kops.k8s.local vol-08ec18cef94bffa3e

vpc test.kops.k8s.local vpc-08a10ad00d53d9867

Must specify --yes to delete cluster

Without --yes, the command only lists the resources provisioned as part of the kOps cluster. If you are sure you want to delete them, add --yes

$ kops delete cluster test.kops.k8s.local --yes
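Because the deletion cannot be undone, a small guard around the two-step flow above can help in scripts. This is only a sketch under stated assumptions: `delete_cluster_confirmed` is a hypothetical wrapper, the exit status of the preview run is ignored because it is not relied upon here, and `KOPS` can be overridden (for example with `echo`) to try the flow without touching a real cluster.

```shell
#!/usr/bin/env bash
# The kops binary can be overridden, e.g. KOPS=echo for a dry run.
KOPS="${KOPS:-kops}"

# delete_cluster_confirmed previews the deletion, then deletes only if
# the cluster name is typed back exactly.
# Hypothetical wrapper, for illustration only.
delete_cluster_confirmed() {
  local cluster="$1" answer
  # Preview: without --yes, kops only lists what would be removed.
  "$KOPS" delete cluster "$cluster" || true
  printf 'Type the cluster name to confirm deletion: ' >&2
  read -r answer
  if [ "$answer" = "$cluster" ]; then
    "$KOPS" delete cluster "$cluster" --yes
  else
    echo "Name did not match, nothing deleted" >&2
    return 1
  fi
}
```

Running `delete_cluster_confirmed test.kops.k8s.local` shows the resource list first and proceeds only after the name is typed again.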